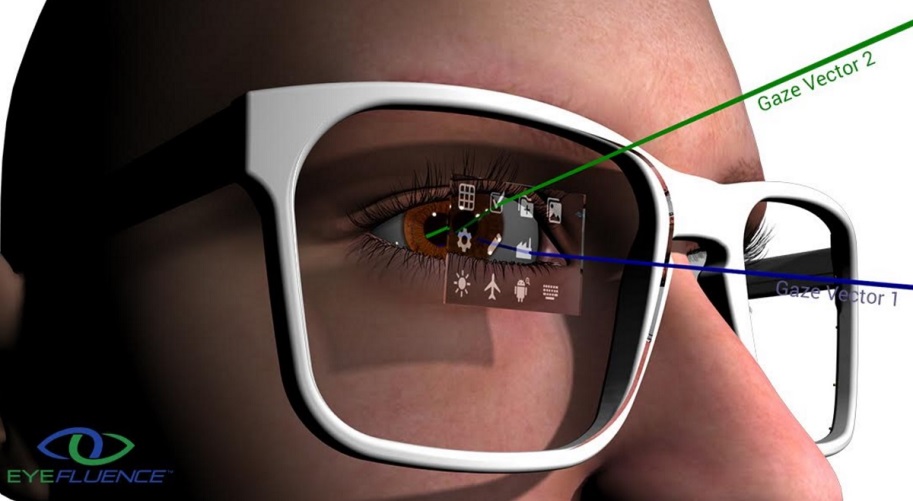

Silicon Valley-based startup Eyefluence has conducted research on controlling a computer screen with your eyes, supporting the company’s goal of hands-free navigation. It has been testing different prototypes with augmented reality glasses outfitted with cameras that track what is seen. To click on an item, you just have to look at it.

“Our prime directive is to let your eyes do what your eyes do,” said Jim Marggraff, founder and CEO of Eyefluence. “We don’t force you to do strange things. We have developed a whole user interface around what your eyes do naturally so that it is fast and non-fatiguing. You can surround yourself with a huge amount of information. We see that as a change in how humans can process information, solve problems, learn, and communicate.”

In the 1990s, researchers at a company called Eye-Com were studying how to control devices with the eyes. Their motivation was to use eye interaction to help police officers and firefighters in their line of work. Marggraff met with the company in 2013, eventually acquiring the rights to the technology and starting Eyefluence. The initial device design was small and robust, two key requirements for eye interaction on a head-mounted display (HMD).

With the potential to work in various online circumstances, the hands-free system relies heavily on biomechanics. The prototype’s design depends on the “eye-brain connection,” syncing biology and technology to enable an HMD. The company promotes a method of “no wait, no wink, just look” for its eye and screen interaction, which can also be integrated into any augmented reality (AR), virtual reality (VR), or mixed reality (MR) platform. When someone using an Eyefluence camera blinks or looks away, the device filters that out, and it makes note of factors such as eye color, pupil size, and nose bridge. These are all vital components in helping Eyefluence understand the connection between the brain and the eye.

The team has designed the product to eliminate the clumsiness that can ruin AR experiences. Eyefluence eye interaction completes actions on the head-mounted display faster than fingers can on a smartphone, and it uses Continuous Biometric Identification (CBID) to replace passwords and logins. In VR, the speed of eye interaction adds a further dimension: figures in a virtual world can be fully aware of your eye contact. The Eyefluence glasses also cut down on VR-induced nausea, as they minimize head movements and adjust for distance between pupils and lens distortion.

The hardware is also capable of identifying someone based on a scan of the iris within 100 milliseconds. A VentureBeat report shared some of the device’s potential functions, including taking a photo of something by looking at it and then looking up online what the image shows. While demonstrating a heads-up display for an oil rig maintenance worker, Marggraff used AR glasses to cross off items on a checklist by looking at each task. While the plans are there, it will still take some time for Eyefluence to integrate fully into AR and VR scenarios.

Hoping to surpass similar technology such as the failed Google Glass, the Eyefluence team is discussing partnerships with Fortune 500 companies.

Source: Eyefluence 1, Eyefluence 2, TechCrunch, VentureBeat, Digital Trends
