Artificial intelligence driven by machine learning has advanced so rapidly that ethical thinking lags years behind, which might explain a study from Shanghai Jiao Tong University in China claiming that computers can tell whether you’ll become a criminal based solely on your facial features.

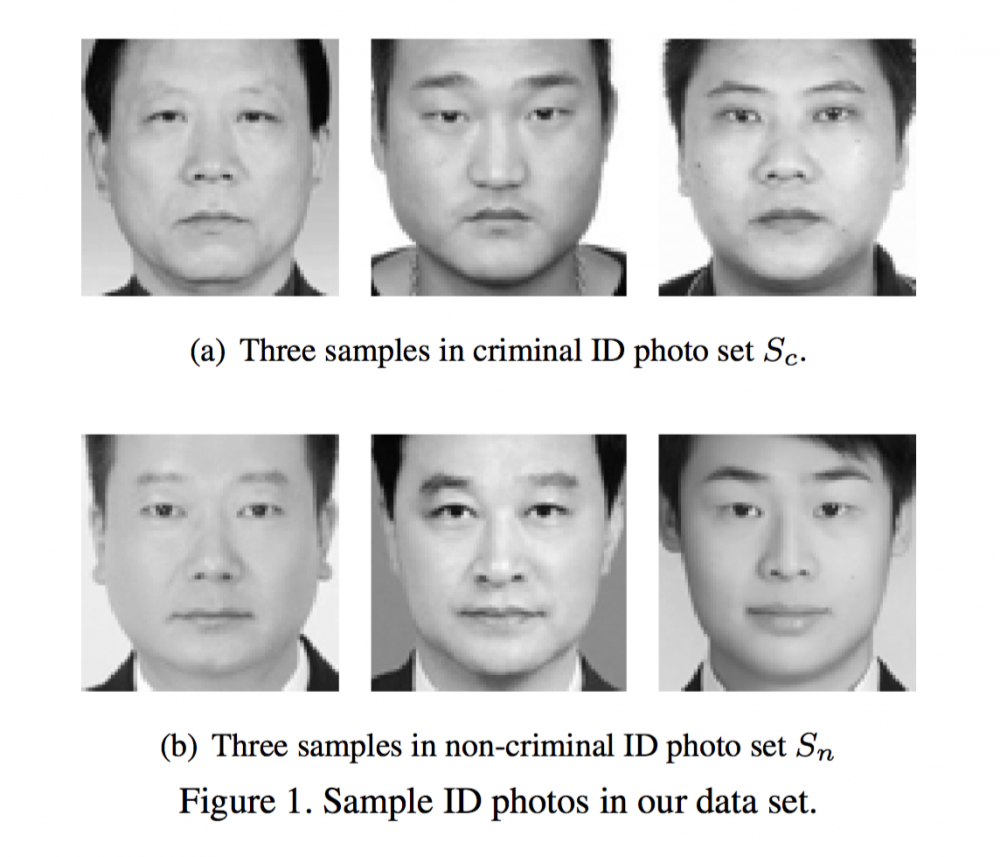

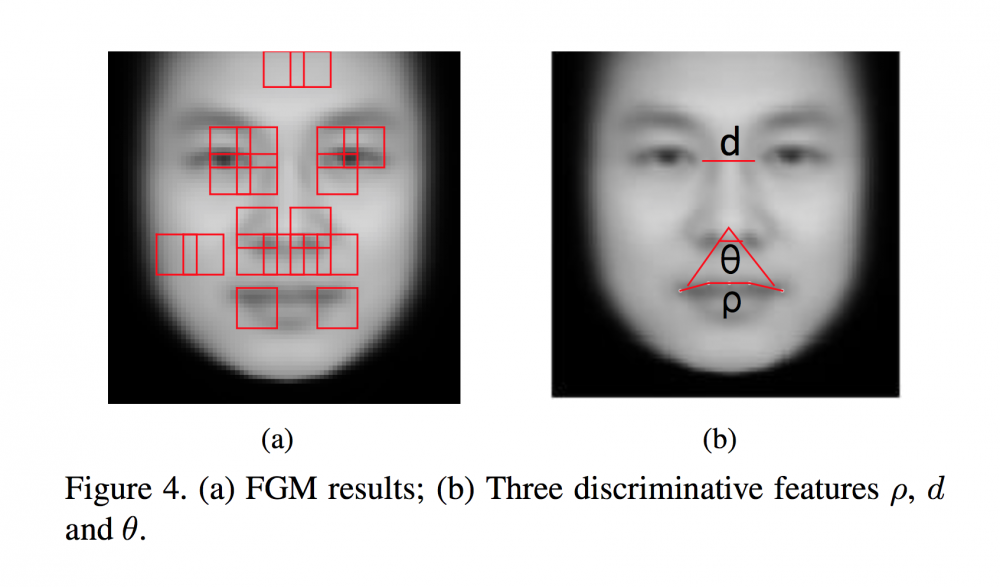

In a paper titled “Automated Inference on Criminality using Face Images,” two researchers analyzed the faces of 1,856 real people using computer software powered by “supervised machine-learning.” After finding “some discriminating structural features for predicting criminality, such as lip curvature, eye inner corner distance, and the so-called nose-mouth angle,” the researchers concluded that “all four classifiers perform consistently well and produce evidence for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic.”

The study describes its claim as a “historical controversy” without ever explaining why: similar claims were made generations ago by eugenicists attempting to correlate physiology with moral and intellectual qualities. But phrenology, the practice of studying the shape of a person’s skull to determine that person’s intelligence, was debunked as pseudoscience. Thanks to the phenomenon known as pleiotropy, we now know that genes are expressed in more than one way or place in the human body. In other words, a single gene can influence two or more seemingly unrelated phenotypic traits, meaning the observable physical or biochemical characteristics of an organism.

Arguing that machines are not inherently racist, the researchers defend their position with another discredited argument: “Unlike a human examiner/judge, a computer vision algorithm or classifier has absolutely no subjective baggage, having no emotions, no biases whatsoever due to experience, race, religion, political doctrine, gender, age, etc., no mental fatigue, no preconditioning of a bad sleep or meal. The automated inference on criminality eliminates the variable of meta-accuracy (the competence of the human judge/examiner) altogether.” The researchers ultimately fail to acknowledge that no software is created in a vacuum. Algorithms are designed by humans and can therefore exhibit human bias.

Another flaw lies in the racial make-up of a nation’s prison population and the underlying bias of institutionalized racism. The number of people incarcerated in the United States rose nearly fivefold between 1980 and 2013, from 319,598 to 1,574,700. But while incarceration rates rose for all men from 1980 to 2000, the increase was especially steep for uneducated black men, whose rate rose from 10% in 1980 to 30% in 2000. The rate for their uneducated white counterparts rose from about 3% to 7%. And here lies the problem: whatever the algorithm learns depends on biased data, which disproportionately associates the physical characteristics of one group with a higher crime rate than another.
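To make the biased-data argument concrete, here is a minimal Python sketch. It is not the researchers’ method, and every number in it is invented for illustration: two hypothetical groups offend at exactly the same rate, but an offense leads to a conviction more often in one group, and a made-up facial measurement differs between the groups on average while having no connection to behavior.

```python
import random


def simulate(n_per_group=50_000, offense_rate=0.05, seed=0):
    """Build a synthetic population of two hypothetical groups, A and B.

    Assumptions (all invented for illustration):
    - both groups offend at the SAME rate;
    - an offense leads to a conviction more often in group B than in group A;
    - a facial measurement differs by group on average but is unrelated to behavior.
    """
    rng = random.Random(seed)
    conviction_prob = {"A": 0.2, "B": 0.6}  # biased enforcement, not behavior
    feature_mean = {"A": 0.0, "B": 1.0}     # arbitrary group-linked trait
    people = []
    for group in ("A", "B"):
        for _ in range(n_per_group):
            offended = rng.random() < offense_rate
            convicted = offended and rng.random() < conviction_prob[group]
            feature = rng.gauss(feature_mean[group], 1.0)
            people.append({"group": group, "feature": feature,
                           "offended": offended, "convicted": convicted})
    return people


def rate(people, key):
    """Fraction of the given people for whom the boolean field `key` is True."""
    return sum(p[key] for p in people) / len(people)


def compare_by_feature(people, threshold=0.5):
    """Split on the facial measurement and report both rates for each side."""
    high = [p for p in people if p["feature"] > threshold]
    low = [p for p in people if p["feature"] <= threshold]
    return {
        "high": {"offended": rate(high, "offended"),
                 "convicted": rate(high, "convicted")},
        "low": {"offended": rate(low, "offended"),
                "convicted": rate(low, "convicted")},
    }


if __name__ == "__main__":
    result = compare_by_feature(simulate())
    for side, rates in result.items():
        print(side, {k: round(v, 4) for k, v in rates.items()})
```

Under these assumptions, people with a high value of the measurement are convicted more often than those with a low value, even though the two sides offend at nearly the same rate. A classifier trained on conviction labels would therefore learn the measurement as a “predictor” of criminality when it only reflects who gets convicted.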

There is also the potential for abuse by law enforcement. Speaking with The Intercept, Kate Crawford, an AI researcher at Microsoft Research New York, MIT, and NYU, cautioned, “As we move further into an era of police body cameras and predictive policing, it’s important to critically assess the problematic and unethical uses of machine learning to make spurious correlations.” U.S. law enforcement agencies have already collected face scans and DNA on half of U.S. adults; factor in police body cameras and bias-driven “predictive” policing software, and we’ll have everyday Americans labeled as potential criminals without ever having committed a crime.

Given the limited sample size and the fact that half of the images used were of convicted criminals, not to mention that the type of criminal offense was omitted, it is reasonable to conclude that the study shows a correlation at most, and correlation does not imply causation.

Source: The Intercept via Arxiv

