When was the last time you pulled a map out of your glove box to figure out how to get from one place to another? When was the last time you went to Mapquest.com to print out directions to a new location?

Thanks to advances in technology and precision navigation, we no longer depend on the “old ways” of navigating with maps. Revolutionary improvements in the Global Positioning System (GPS), inertial measurement units (IMUs), and global connectivity now give your smartphone its position and orientation so it can guide you to your destination.

That said, there is still a need for higher-precision navigation systems that combine multiple sensors to determine where we are. We see this need today whenever our position on a smartphone or car map jumps around as we drive through a tunnel or under a freeway overpass. Achieving true precision navigation is considered so important that DARPA has active development efforts under way to improve it; the ability to determine an exact location with limited or no GPS/GNSS coverage is considered the “Holy Grail.”

What is precision navigation? In the context of many of today’s applications, precision navigation is the ability of vehicles and operators to continuously know their absolute and relative position in 3D space with high accuracy, repeatability, and confidence. Because precise position data is necessary for safe and efficient operation, it must also be available quickly, cost-effectively, and regardless of geography.

High-precision navigation solutions need to be able to function well in adverse environmental conditions. (Source: ACEINNA)

Numerous industries have important applications that require reliable methods of precise navigation, localization, and micro-positioning. Smart agriculture increasingly relies on autonomous or semi-autonomous equipment to improve precision and productivity in cultivating and harvesting the world’s food supply. The global pandemic highlighted the importance of logistical efficiency in warehousing, shipping, and delivery, and warehouse and freight robots are only as effective as their ability to position and navigate themselves. Autonomous long-haul and last-mile delivery also require precise navigation.

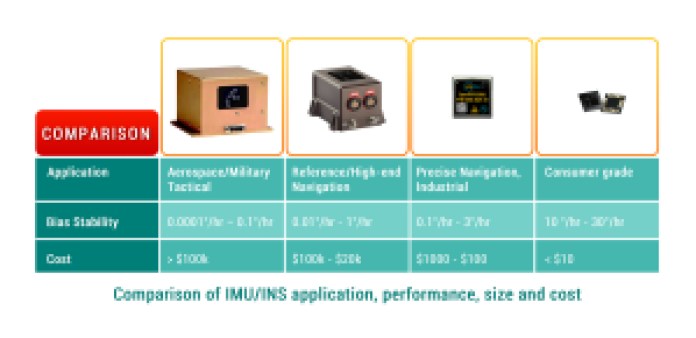

High-precision solutions already exist and are used in applications such as satellite navigation, commercial airplanes, and submarines. However, they are expensive (hundreds of thousands of dollars) and large (roughly the size of a loaf of bread). The cost and size of these inertial navigation systems (INS) stem primarily from the high-performance IMU they require, and gyroscope performance is the most critical factor.

IMU hardware has steadily shrunk in size and cost, from bulky gimballed gyroscopes to fiber-optic gyroscopes to today’s tiny microelectromechanical systems (MEMS) sensors. Another consideration is the cost of INS software development and integration, which grows as modern applications become more complex and incorporate artificial intelligence and machine learning to improve decision-making and safety.

Inertial navigation systems and sensors range in performance, size, and cost. Although originally developed for specialized applications, all are now found in automotive applications. (Source: ACEINNA)

The key to bringing this technology into everyday life is to preserve performance while reducing cost and size. Doing so enables new capabilities that are key to advancements in drones, agricultural robotics, self-driving cars, and smartphones.

The causes of those jumps in your position on a map highlight the limitations of today’s GPS technology. Errors from atmospheric interference and outdated satellite orbit (ephemeris) data can be partly corrected, yielding a best-case calculated position accuracy of about 1 meter. Add multipath errors in urban areas, or poor coverage elsewhere, and the receiver’s margin of error grows while its position confidence drops.

This margin of error translates to road-level accuracy: knowing which road a vehicle is probably on. Higher-precision navigation aims for lane-level accuracy or better: knowing the vehicle’s position within a lane.

Imagine a person driving down a road. Without much effort, the driver observes and maneuvers around obstacles such as pedestrians, bicyclists, and potholes without veering out of the lane. Drivers traverse complex intersections with confusing road signs every day, even in adverse weather. Humans combine multiple senses, including depth perception, with accumulated experience and practice to perform the seemingly simple task of driving a vehicle.

A wide variety of sensors and sensor types can be combined to provide highly accurate navigation. (Source: ACEINNA)

Similarly, autonomous and semi-autonomous vehicles use a suite of perception sensors together with navigation solutions to maneuver safely from one point to another. Because of the existing limitations of GPS and INS (the trade-off among performance, cost, and size), vehicle systems must employ additional methods to improve positioning accuracy. Lane-level or centimeter-level position accuracy is important in L2+ automated systems, enabling a vehicle to navigate safely in a complex, dynamic environment with minimal human intervention.

One popular localization method combines image and depth data from LiDAR and cameras with HD maps to calculate vehicle position in real time relative to known static landmarks and objects. An HD map is a powerful tool containing massive amounts of data, including road- and lane-level details and semantics, dynamic behavioral data, traffic data, and much more. Camera images and/or 3D point clouds are overlaid on and cross-referenced against HD map data so the vehicle can make maneuvering decisions for vehicle control.
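To illustrate what “calculating position relative to known static landmarks” can mean in its simplest form, the sketch below assumes that each observed landmark has already been matched to an HD-map entry and that the vehicle’s heading is known, so observations are expressed in map-aligned axes. Under those assumptions, each match implies a vehicle position (map position minus observed offset), and the least-squares estimate is the mean of those implied positions. The function name and the coordinates are hypothetical; real localization engines also estimate orientation, handle 3D geometry, and reject mismatched landmarks.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_position(map_landmarks: List[Point],
                      observed_offsets: List[Point]) -> Point:
    """Least-squares 2D vehicle position from matched landmarks (translation only).

    map_landmarks[i]: landmark i's (x, y) in the HD-map frame, meters.
    observed_offsets[i]: landmark i's (x, y) relative to the vehicle, meters,
    already rotated into map-aligned axes (heading assumed known).
    """
    if not map_landmarks or len(map_landmarks) != len(observed_offsets):
        raise ValueError("need one observation per matched landmark")
    n = len(map_landmarks)
    # Each match implies vehicle = landmark_map - landmark_offset.
    # The mean of the implied positions minimizes the sum of squared residuals.
    x = sum(m[0] - o[0] for m, o in zip(map_landmarks, observed_offsets)) / n
    y = sum(m[1] - o[1] for m, o in zip(map_landmarks, observed_offsets)) / n
    return (x, y)


# Hypothetical example: three mapped landmarks and slightly noisy observations.
landmarks = [(100.0, 50.0), (120.0, 48.0), (110.0, 70.0)]
offsets = [(-10.1, 0.2), (9.9, -1.8), (0.1, 20.1)]
print(estimate_position(landmarks, offsets))
```

Averaging is the simplest possible estimator; with more landmarks, the noise in individual observations tends to average out, which is one reason dense, accurate HD maps are valuable.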

This method of localization, while effective, has its challenges. HD maps are data-intensive, expensive to generate at scale, and must be constantly updated. Perception sensors are prone to environmental interference, which compromises the quality of the data.

As automated test vehicle fleets on the road grow, they generate larger datasets of real-world driving scenarios, and predictive modeling for localization becomes more robust. However, this comes at a high cost: expensive sensors, computational power, algorithm development, high operational maintenance, and the collection and processing of terabytes of data.

There is an opportunity to improve both the accuracy and integrity of localization methodologies for precise navigation by incorporating real-time kinematics (RTK) into an INS solution. RTK is a technique and service that corrects for errors and ambiguities in GPS/GNSS data to enable centimeter-level accuracy.

RTK relies on a network of fixed base stations that send correction data over the air to moving vehicles, or rovers. Each rover integrates this data into its INS positioning engine to calculate its position in real time, achieving accuracy of up to 1 cm + 1 ppm (a fixed 1 cm plus 1 mm for every kilometer between the rover and the base station), even without additional sensor fusion and with very short convergence times.
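A “1 cm + 1 ppm” specification grows with the baseline, the distance between the rover and its base station. The short sketch below works through that arithmetic, assuming the usual reading of the spec (a fixed term plus one part per million of the baseline); the function name and default values are illustrative, not taken from any particular receiver’s datasheet.

```python
def rtk_accuracy_cm(baseline_km: float, base_cm: float = 1.0, ppm: float = 1.0) -> float:
    """Accuracy of a 'base + ppm x baseline' spec, in centimeters.

    Assumes the spec is a fixed term (base_cm) plus `ppm` parts per million
    of the rover-to-base-station distance.
    """
    if baseline_km < 0:
        raise ValueError("baseline must be non-negative")
    baseline_cm = baseline_km * 100_000.0  # 1 km = 100,000 cm
    return base_cm + ppm * baseline_cm / 1_000_000


for km in (1, 10, 30):
    print(f"{km:>3} km baseline -> {rtk_accuracy_cm(km):.1f} cm")
```

With these defaults, a rover 1 km from its base station is specified at 1.1 cm and one 10 km away at 2 cm, which is why RTK networks space base stations to keep baselines short.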

Integrating RTK into an INS and sensor fusion architecture is relatively straightforward and does not consume heavy system resources. RTK does require connectivity and GPS coverage to deliver the most precise navigation, but even during an outage, a system can use dead reckoning with a high-performance IMU to continue operating safely.
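Dead reckoning propagates the last good position using only motion measurements, so sensor errors accumulate. The minimal planar sketch below assumes the IMU supplies forward acceleration and yaw rate, starts from the last RTK fix, and ignores noise, accelerometer bias, and vehicle dynamics. It compares a perfect gyroscope with one carrying a constant 10 deg/h bias, an arbitrary illustrative value rather than the spec of any real product. All names here are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float = 0.0        # east position, m
    y: float = 0.0        # north position, m
    heading: float = 0.0  # rad, measured from the x-axis
    speed: float = 0.0    # m/s


def dead_reckon(s: State, accel: float, yaw_rate: float, dt: float) -> State:
    """Advance a planar state one step from forward accel (m/s^2) and yaw rate (rad/s)."""
    heading = s.heading + yaw_rate * dt
    speed = s.speed + accel * dt
    x = s.x + speed * math.cos(heading) * dt
    y = s.y + speed * math.sin(heading) * dt
    return State(x, y, heading, speed)


def simulate(gyro_bias: float, seconds: float = 30.0, dt: float = 0.01) -> State:
    # Start at the last good fix, driving straight east at 20 m/s.
    s = State(speed=20.0)
    for _ in range(round(seconds / dt)):
        # The road is straight (true yaw rate 0), so the measured rate is pure bias.
        s = dead_reckon(s, accel=0.0, yaw_rate=gyro_bias, dt=dt)
    return s


truth = simulate(gyro_bias=0.0)
drifted = simulate(gyro_bias=math.radians(10.0) / 3600.0)  # 10 deg/h bias
err = math.hypot(drifted.x - truth.x, drifted.y - truth.y)
print(f"position error after 30 s outage: {err:.3f} m")
```

In this simplified model the heading error grows linearly with time and the cross-track error roughly with its square (about v·b·t²/2, a little over 0.4 m here), which illustrates why gyroscope performance matters so much during an outage.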

RTK also bolsters visual localization by providing a precise absolute position, along with a confidence interval, for each position fix. The localization engine can use this information to reduce ambiguities and validate its temporal and contextual estimates.
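One simple way a localization engine could use per-fix confidence is sketched below: two independent 1D position estimates (for example, lateral offset in the lane from RTK and from vision) are fused by inverse-variance weighting, and a consistency gate flags the pair when they disagree by more than a few combined standard deviations. This is a generic textbook technique chosen for illustration, assuming independent Gaussian errors; it is not presented as ACEINNA’s or any vendor’s method, and the function name, gate value, and numbers are hypothetical.

```python
import math
from typing import Tuple


def fuse(rtk: float, rtk_sigma: float, vis: float, vis_sigma: float,
         gate: float = 3.0) -> Tuple[float, float, bool]:
    """Inverse-variance fusion of two independent 1D position estimates.

    Returns (fused position, fused sigma, consistent). `consistent` is False
    when the estimates differ by more than `gate` combined standard deviations;
    the caller decides what to do then (e.g., distrust the visual estimate).
    """
    if rtk_sigma <= 0 or vis_sigma <= 0:
        raise ValueError("standard deviations must be positive")
    w_rtk = 1.0 / rtk_sigma ** 2
    w_vis = 1.0 / vis_sigma ** 2
    fused = (w_rtk * rtk + w_vis * vis) / (w_rtk + w_vis)
    fused_sigma = math.sqrt(1.0 / (w_rtk + w_vis))
    # The difference of two independent Gaussians has variance sigma_a^2 + sigma_b^2.
    consistent = abs(rtk - vis) <= gate * math.hypot(rtk_sigma, vis_sigma)
    return fused, fused_sigma, consistent


# Lateral offset in lane (m): RTK at 2 cm sigma, vision at 10 cm sigma.
print(fuse(2.00, 0.02, 2.10, 0.10))  # estimates agree
print(fuse(2.00, 0.02, 2.50, 0.10))  # flagged as inconsistent
```

Because the weights are inverse variances, the tighter RTK fix dominates the fused value, and the fused sigma is smaller than either input’s; the gate is what lets an absolute fix validate, rather than merely average with, a visual estimate.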

Conclusion

Precise navigation is at the core of countless modern applications and industries that strive to improve our daily lives: autonomous vehicles, micro-mobility, smart agriculture, construction, and surveying. If a machine can move, it is vital to measure and control its motion accurately and with certainty.

Inertial sensors are essential for measuring motion, GPS provides valuable contextual awareness of location in 3D space, and adding RTK increases reliability and integrity. Vision sensors enable depth perception, which allows a system to plan ahead. Combining data from these sensors and technologies builds confidence in navigational planning and decision-making, producing outcomes that are safe, precise, and predictable.

About the authors

Reem Malik is responsible for Strategic Sales and Business Development at ACEINNA, with a focus on precise positioning hardware and software solutions for autonomous applications. She has over 15 years of engineering and sales experience, working closely with global automotive, consumer, and instrumentation customers at Analog Devices and Allegro MicroSystems. Reem earned her master’s and bachelor’s degrees in electrical engineering from Worcester Polytechnic Institute (WPI) in Worcester, Massachusetts.

Eric Aguilar holds a technical degree (Cal Poly/USC) and began his career building sensors for drones at U.S. Navy research labs. These sensors were fundamental to unlocking autonomous flight and advancing drone capabilities. While at the Navy, he joined a company working to commercialize a sensor his team had developed. That led to a startup, Lumedyne, which built a motion sensor; the company was later acquired by Google for $85 million.

Eric then moved into developing autonomous systems. As avionics lead at Google[x] Project Wing, he enabled autonomous flight for its commercial delivery drones. He then worked at Tesla, where he led a team of 300 on the Model 3 integration efforts that enabled Autopilot. Most recently, he was at Argo AI (a self-driving company funded by Ford and VW), where he led sensor integration for its fleet of robotaxis.

Eric is now the CEO and co-founder of Omnitron Sensors and an advisor at Ascent Robotics. Omnitron is providing a new degree of freedom to design within the silicon process for sensors. The company is in discussions with leading automotive suppliers and robotics companies to engage in partnerships.

A new book, AspenCore Guide to Sensors in Automotive: Making Cars See and Think Ahead, with contributions from leading thinkers in the safety and automotive industries, heralds the industry’s progress and identifies the engineering community’s remaining challenges.

It’s available now at the EE Times bookstore.
