Modern communications and sensing technologies have revolutionized the way cars are designed. To increase safety and move toward autonomous driving (AD), new vehicles must include systems that can interact with their surroundings, infrastructure, or other traffic participants to detect possible sources of danger. Therefore, automotive sensors, such as radar and LiDAR, are key to improving road traffic safety and reaching high levels of autonomous driving. Their use will help reduce the number of traffic accidents and deaths on the roads worldwide, with the final objective set by Vision Zero: zero deaths in traffic accidents by 2050.

Among the sensors that are considered for assisted and autonomous driving, radar has proved to be extremely reliable for advanced driver-assistance system (ADAS) applications like adaptive cruise control. Applications for radar are expected to expand in the future, as radar is often used together with other sensing technologies, such as video cameras and LiDAR. The advantages and drawbacks of the different sensor types are summarized in the table.

Full AD will most likely require the fusion of data obtained from different sensing technologies. With sensors distributed around the car, it will be possible to provide full 360° coverage, creating a safety “cocoon” around the car.

An additional advantage is that radar sensors can easily be installed behind common elements of the car, like bumpers or emblems, so that they are invisible and do not affect aesthetics. The frequency band from 76 GHz to 81 GHz has been accepted by most countries as the frequency band for automotive radars. The physical integration gets easier with high frequencies of operation, as the size of the radar antennas will be reduced. Yet new challenges appear when going up in frequency due to tradeoffs in power, higher losses, and higher impact of manufacturing tolerances.
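To give a feel for why higher operating frequencies ease physical integration, the free-space wavelength can be worked out directly. The sketch below is illustrative only: the function names are invented here, the 24 GHz comparison point is not taken from this article, and the half-wavelength element size is a rough free-space figure that ignores substrate and radome effects.

```python
# Free-space wavelength across the 76-81 GHz automotive radar band.
C = 299_792_458.0  # speed of light in m/s


def wavelength_mm(freq_ghz: float) -> float:
    """Return the free-space wavelength in millimeters."""
    return C / (freq_ghz * 1e9) * 1e3


def half_wave_element_mm(freq_ghz: float) -> float:
    """Rough size of a half-wavelength antenna element.

    Assumption: free space, no dielectric loading.
    """
    return wavelength_mm(freq_ghz) / 2


# 24 GHz is included only as a lower-frequency comparison point (assumption).
for f in (24.0, 76.0, 81.0):
    print(
        f"{f:5.1f} GHz: wavelength {wavelength_mm(f):.2f} mm, "
        f"half-wave element {half_wave_element_mm(f):.2f} mm"
    )
```

Within the 76 GHz to 81 GHz band the wavelength is a little under 4 mm, so individual antenna elements are only a couple of millimeters across. The same small dimensions are also why manufacturing tolerances weigh more heavily at these frequencies.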

Radar is also especially well suited to automotive applications because vehicles are good reflectors of radar waves. It can be used both for “comfort” functions, such as adaptive cruise control, and for high-resolution sensing applications that add to the passive and active safety of a vehicle. Examples include blind-spot detection, lane-change assist, rear cross-traffic alert, and the detection of pedestrians and bicycles near the vehicle.

Nowadays, radar sensors can be classified based on their detection range:

- Short-range radar: up to 50 meters with a wide field of view and high resolution

- Mid-range radar: up to 100 meters with a medium field of view

- Long-range radar: 250 meters or more, with a narrower field of view and less emphasis on resolution

With new technology development, the range is expected to extend beyond these limits, while the vertical dimension is added to the detection to provide a full 3D image of the surroundings.

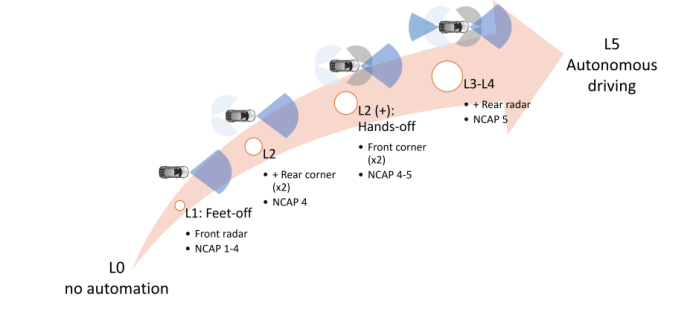

In the future, multiple radar modules will be added to cars to move beyond the basic forward-looking radar configuration, which provides basic Level 1 AD and up to Level 4 in the New Car Assessment Program (NCAP). Soon, more cars will be equipped with corner radar sensors to reach Level 2+ AD and NCAP 4–5 in standard cars, and Level 3–4 AD and NCAP 5 in the premium segment (Figure 1).

Figure 1: Automotive radar for different NCAP and autonomous-driving levels (Source: Renesas Electronics Corp.)

Yet the way the radar data is handled will depend strongly on the vehicle’s architecture. The current trend toward higher-performance central computing units is also driving in-vehicle E/E architecture to shift from a distributed to a centralized architecture.

Although the migration to a fully centralized architecture will not be completed until the 2030s, partial implementations will appear on the market earlier. First, some domain controllers will be used for specific functions, such as ADAS.

Next, the number of domain controllers will increase, and zone controllers will be introduced, before a fully centralized E/E architecture is established in which the vehicle’s central computer is connected to the sensors through the zone control units. This evolution will also require greater capacity and reliability from the vehicle’s networks and will increase software complexity. It may bring significant challenges, including additional connections and potentially more expensive cables to cope with the higher data-rate demands.

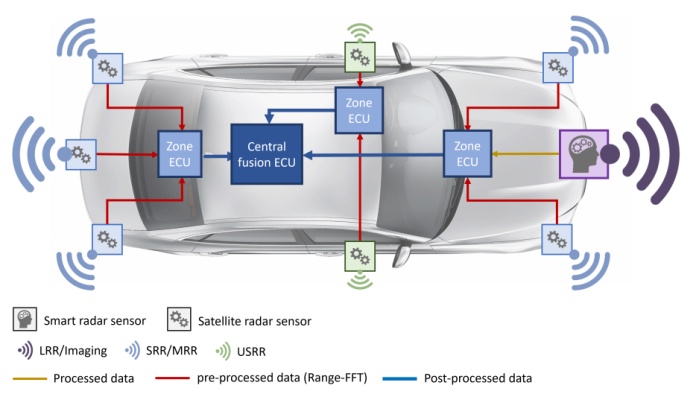

With the new E/E architectures being introduced, part of the radar processing could be offloaded from the radar sensor module (edge computing) to the zone or the central ECU, allowing for more efficient computations. Today, the full radar processing is carried out on the edge, with “smart sensors,” as shown in Figure 2.

This means that a number of independent radar modules are distributed around the vehicle, each with its own radar transceiver and processing capabilities. The processed data, normally the object list, is then transferred to an ADAS ECU for further processing and, potentially, fusion with the data from other sensors. With an appropriate distribution of sensors in the car, the environment of the vehicle can be properly perceived and obstacles can be identified.
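As a rough illustration of what an object list might carry and how an ADAS ECU could place detections from several smart sensors into one common picture, consider the sketch below. Every name in it (`RadarObject`, `SensorMount`, `to_vehicle_frame`), the field layout, and the mounting values are hypothetical; real object-list formats are defined by each sensor supplier and typically contain many more fields.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarObject:
    """One entry of a hypothetical object list, in the sensor's own frame."""
    distance_m: float    # radial distance from the sensor
    azimuth_deg: float   # direction relative to boresight, positive to the left
    velocity_mps: float  # radial velocity, negative when approaching


@dataclass
class SensorMount:
    """Hypothetical mounting pose of a radar module on the vehicle."""
    x_m: float      # position along the vehicle's forward axis
    y_m: float      # position along the vehicle's left axis
    yaw_deg: float  # boresight direction relative to the forward axis


def to_vehicle_frame(obj: RadarObject, mount: SensorMount) -> tuple[float, float]:
    """Convert a polar detection into x/y coordinates in the vehicle frame."""
    angle = math.radians(mount.yaw_deg + obj.azimuth_deg)
    return (
        mount.x_m + obj.distance_m * math.cos(angle),
        mount.y_m + obj.distance_m * math.sin(angle),
    )


# Example: a front-left corner radar rotated 45 degrees outward (assumed values).
front_left = SensorMount(x_m=3.5, y_m=0.8, yaw_deg=45.0)
detection = RadarObject(distance_m=10.0, azimuth_deg=-45.0, velocity_mps=-2.0)
print(to_vehicle_frame(detection, front_left))
```

Once every sensor's detections are expressed in the same vehicle frame, the ECU can compare and combine them with data from other sensors.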

With the development of centralized computing architectures, the processing of the data from some radar modules will probably be shifted from the radar sensor to a remote processing unit — either a zone ECU or the vehicle’s central computer directly. The radar modules themselves would then be less “smart” and perform only a limited amount of processing of the received radar signals.

For example, the module will determine the distance to the different objects and provide the range profiles to the remote processor. The remote processor will then receive the pre-processed data from the different satellite radar modules and perform the remaining processing steps for each set of data to generate the object list with the objects’ respective characteristics (distance, direction, and velocity) and create the full image of the surroundings. The obtained results will be fused together or combined with the results from other sensors. This new, multi-sensing configuration will provide the required accuracy and eliminate redundancies to enable high levels of AD, as shown in Figure 3.

Figure 3: Example of a radar architecture with satellite modules and remote processing on zone-based ECUs (Source: Renesas Electronics Corp.)
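The split between satellite module and remote processor can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the processing chain of any particular product: it assumes a chirp-sequence (FMCW) radar, uses a naive DFT from the standard library in place of the windowed FFTs a real system would use, simulates a single noise-free target, and leaves out direction estimation and detection thresholding. All function names are hypothetical.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform; O(N^2), fine for a small illustration."""
    n = len(samples)
    return [
        sum(samples[k] * cmath.exp(-2j * math.pi * i * k / n) for k in range(n))
        for i in range(n)
    ]


def satellite_range_profiles(chirps):
    """Runs on the satellite module: one range transform per chirp."""
    return [dft(chirp) for chirp in chirps]


def central_detect(range_profiles):
    """Runs on the remote ECU: Doppler transform across chirps for each
    range bin, then return the (range_bin, doppler_bin) of the strongest cell."""
    n_chirps = len(range_profiles)
    n_bins = len(range_profiles[0])
    best = (0.0, 0, 0)
    for r in range(n_bins):
        doppler = dft([range_profiles[c][r] for c in range(n_chirps)])
        for d, value in enumerate(doppler):
            if abs(value) > best[0]:
                best = (abs(value), r, d)
    return best[1], best[2]


def simulate_chirps(range_bin, doppler_bin, n_samples=32, n_chirps=16):
    """Synthetic beat signal for a single ideal target (assumption: no noise)."""
    return [
        [
            cmath.exp(2j * math.pi * (range_bin * s / n_samples + doppler_bin * c / n_chirps))
            for s in range(n_samples)
        ]
        for c in range(n_chirps)
    ]


# The satellite sends range profiles; the ECU finishes the processing.
profiles = satellite_range_profiles(simulate_chirps(range_bin=5, doppler_bin=3))
print(central_detect(profiles))
```

The detected cell should match the range and Doppler bins used in the simulation. Only the range profiles cross the network in this arrangement, while the velocity processing runs on the ECU.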

In the first implementations of this centralized architecture, the pre-processed data from the different radar sensors can be transferred to zone or central ECUs using the car’s Ethernet backbone. When higher definition is needed and the amount of radar data is too large, as in the case of forward-looking or imaging radar, the radar processing may still be carried out on the sensor itself to reduce the amount of data to be transferred.
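A back-of-the-envelope estimate shows how far apart the two cases can be. The figures below (channel count, range bins, chirps per frame, sample width, frame rate, and object-list size) are assumptions chosen purely for illustration and do not describe any specific sensor; the function names are likewise hypothetical.

```python
def range_profile_rate_bps(rx_channels, range_bins, chirps_per_frame,
                           bits_per_sample, frames_per_s):
    """Raw payload rate when range profiles are streamed to a remote ECU."""
    return rx_channels * range_bins * chirps_per_frame * bits_per_sample * frames_per_s


def object_list_rate_bps(max_objects, bytes_per_object, frames_per_s):
    """Raw payload rate when only the object list leaves the sensor."""
    return max_objects * bytes_per_object * 8 * frames_per_s


# Assumed example configuration (not from any datasheet).
profiles_bps = range_profile_rate_bps(
    rx_channels=4, range_bins=256, chirps_per_frame=128,
    bits_per_sample=32, frames_per_s=20,
)
objects_bps = object_list_rate_bps(max_objects=64, bytes_per_object=16, frames_per_s=20)

print(f"range profiles: {profiles_bps / 1e6:.1f} Mbit/s")
print(f"object list:    {objects_bps / 1e6:.2f} Mbit/s")
```

With these assumed numbers the range-profile stream is several hundred times larger than the object list, before any protocol overhead. An imaging radar with many more channels would scale the first figure up further, which is why processing on the sensor can remain attractive in that case.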

Centralized processing of data from remote radar sensors offers a wide range of benefits. First, the radar modules themselves become less complex, thus saving size and cost and reducing heat-dissipation problems. Repairs and upgrades, both for hardware and software, become easier.

Second, using the existing network of the car, namely the Ethernet backbone, also reduces the cost and weight of cabling. Additionally, the data transferred through Ethernet will be available in a format that makes it easier to store and work with.

Finally, processing the data on the vehicle’s control units opens the door for higher efficiency and more sophisticated and complex operations. The sensing capabilities can be enhanced by implementing data fusion with the information obtained from other sensing technologies, such as cameras or LiDAR. Machine learning and artificial intelligence can be considered for advanced detection and prediction, thus allowing for higher levels of autonomous driving.

Both edge processing and centralized computing are expected to coexist for some years. The migration to E/E architectures with centralized computing will require access to high-speed links throughout the vehicle, which may lead to the use of different standards for data exchange. Beyond the added cost and layout complexity, there is not yet a clear winner among the standards for data transmission. CAN and Ethernet dominate today, but some manufacturers are pushing for alternatives like MIPI A-PHY.

In any case, additional security measures become necessary to guarantee the integrity and security of the transferred data. For example, additional processing and memory are needed to transfer data through an Ethernet link, as Media Access Control security (MACsec) and a hardware security module may be required.

The increased number of radar modules on the car itself and on other vehicles, all of them transmitting and receiving radio waves, can also lead to interference problems that will need to be solved. Interference can degrade the detection performance of the radar system, thus reducing the functionality and safety of ADAS and AD systems. Several mitigation strategies are being analyzed, which can be classified into three groups: avoidance, detect and repair, and cooperative, communication-based mitigation.
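To make the “detect and repair” group more concrete, here is one very simple variant: flag time-domain samples whose magnitude is far above the typical level and zero them before further processing. This is an illustrative sketch rather than a method described in this article; the function name, the median-based threshold, and the factor of 5 are all assumptions, and practical systems may interpolate or reconstruct the flagged samples instead of zeroing them.

```python
import math
import statistics


def detect_and_repair(samples, threshold_factor=5.0):
    """Zero out samples whose magnitude exceeds threshold_factor times
    the median magnitude. Returns the repaired signal and flagged indices."""
    magnitudes = [abs(s) for s in samples]
    limit = threshold_factor * statistics.median(magnitudes)
    flagged = [i for i, m in enumerate(magnitudes) if m > limit]
    repaired = [0.0 if m > limit else s for s, m in zip(samples, magnitudes)]
    return repaired, flagged


# Synthetic example: a clean beat signal with a short interference burst.
clean = [math.sin(0.3 * n) for n in range(64)]
corrupted = list(clean)
for n in range(20, 24):
    corrupted[n] += 20.0  # assumed strong interferer hitting four samples

repaired, flagged = detect_and_repair(corrupted)
print("flagged sample indices:", flagged)
```

Zeroing removes the burst at the cost of a small gap in the signal, which is usually less harmful to detection than the raised noise floor the interference would otherwise cause.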

Radar has become a critical sensor for ADAS and AD applications. Radar imaging systems are built from radar modules, which require transceivers that cover the full automotive radar frequency band; support short-, medium-, and long-range radar applications; and meet the needs of centralized processing. Renesas’s newly introduced RAA270205 millimeter-wave radar transceiver is one example. ECUs are also a big part of ADAS and AD solutions, which require advanced systems-on-chip, like Renesas’s R-Car Gen4 series, that support centralized processing and enable high-speed image recognition and processing of surrounding objects from cameras, radar, and LiDAR.
