The metaverse has emerged as a new paradigm in the digital realm alongside augmented-reality (AR), virtual-reality (VR) and extended-reality (XR) technologies. Together, these technologies enable immersive experiences that are affecting a range of industries and societal dynamics.

The metaverse, a word introduced by Neal Stephenson in his 1992 science fiction novel, “Snow Crash,” denotes a communal virtual environment that transcends the boundaries of the actual world. AR, VR and XR are immersive technologies that modify our experience of reality by combining digital and physical realms. The convergence of these technologies has ushered in a new era of interconnected and immersive experiences, presenting boundless opportunities for creativity.

Components of the metaverse

The design of AR/VR/XR is critical in overcoming conventional limitations of user experiences by providing a dynamic and interconnected environment. Designers face the task of creating experiences that go beyond the constraints of physical space, offering users a captivating and dynamic journey. The design concept of the metaverse centers on optimizing user involvement and creating a digital experience that feels instinctive and seamless, using elements like spatial computing and gesture-based interactions.

Virtual reality

VR immerses viewers in completely synthetic worlds, generating a feeling of being physically present in them. It refers to the use of computer-generated models and simulations to facilitate human interaction with artificial 3D visual or sensory environments. These worlds can be meticulously crafted to authentically replicate real-world locations and events. Simply speaking, VR replaces the real environment with the virtual one.

Augmented reality

AR involves the integration of digital images into our perception of the real world through video or photographic displays. It superimposes digital content onto the physical world, enriching our view of the surroundings by adding digital elements like photos, text, GPS data and animation.
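Superimposing digital content on a view of the real world can be illustrated with per-pixel alpha blending, a common compositing technique. This is only a minimal sketch and not how any particular AR app or headset works; the function name `blend_pixel` and the sample colors are illustrative assumptions.

```python
def blend_pixel(camera_rgb, overlay_rgb, alpha):
    """Blend one digital overlay pixel onto one camera pixel.

    alpha = 0.0 keeps the camera pixel unchanged (no overlay);
    alpha = 1.0 fully replaces it with the digital content.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0.0 and 1.0")
    # Weighted average of overlay and camera, channel by channel.
    return tuple(
        round(alpha * o + (1.0 - alpha) * c)
        for c, o in zip(camera_rgb, overlay_rgb)
    )


# Hypothetical sample values: a gray camera pixel and a reddish label pixel.
camera = (100, 100, 100)
label = (200, 0, 50)
half_transparent = blend_pixel(camera, label, 0.5)
```

An AR renderer would apply this kind of blend across the whole frame, with alpha set to zero wherever there is no digital content to show.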

The current distinction between AR and VR lies in how each is displayed: VR requires a wearable headset that completely blocks the user’s view of the actual world, whereas most AR apps use a “window,” such as a smartphone, to overlay digital content onto the real environment. As AR hardware advances and compact head-mounted devices become more comfortable for extended use, both will evolve into wearable experiences.

Mixed reality

MR arises when AR technologies seamlessly blend virtual content with the real environment, allowing for interactive experiences with physical objects. In mixed reality, next-generation sensing and imaging technologies let you manipulate both physical and virtual objects and environments. MR lets you see and stay present in the real world while interacting with a virtual environment using your hands, without taking off your headset. It lets you have one foot (or hand) in the actual world and the other in an imaginary environment, blurring the line between the real and the fictional and changing how you game and work.

Extended reality

XR is an umbrella term that encompasses all immersive technologies that expand our perception of reality, either by merging the virtual and physical worlds or by providing a completely immersive experience. VR, AR and MR can all be considered subsets of XR, and the term is commonly used to cover the complete spectrum of these technologies.

What is the metaverse?

The metaverse is the idea that VR and AR experiences will eventually be connected, offering people a way to move from one environment to another within a much larger and interconnected virtual environment. This is analogous to the way people move from one building to another within the same city in the real world.

The metaverse enables the interconnection of VR simulations, allowing individuals to experience co-presence within virtual environments. A person can engage in a virtual experience, while another user can join the same virtual environment from a distant location to participate in the experience together. This presents opportunities for the metaverse to create virtual events encompassing a wide range of activities, including group educational sessions, concerts and art exhibitions.

The metaverse also leverages technologies like AI, language processing, blockchain, digital assets (NFTs), 5G/6G and Wi-Fi 6E to construct a virtual environment. It requires low latency, virtual computing, storage services and high-speed internet connectivity, and it relies heavily on high-performance computing.

The development of haptic feedback and sensory immersion technologies enhances the realism and immersion of the experience in the metaverse. These technologies allow users to perceive, physically engage with and manipulate digital components.

Headsets are a key component in delivering these experiences. A few examples include Meta’s Meta Quest 3 and Microsoft’s HoloLens 2.

Meta, formerly Facebook, recently introduced Meta Quest 3, announced as the world’s first mass-market MR headset. This device features high processing performance, low latency and a 25-pixel-per-degree 4K+ Infinite Display with a resolution of 2,064 × 2,208 per eye.
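The per-eye resolution quoted above can be turned into pixel counts with simple arithmetic. The sketch below uses only the 2,064 × 2,208 figure from the text; the class and function names are hypothetical. The comparison with the 3,840-pixel width of a 4K UHD panel is one plausible reading of the “4K+” label, not something the source confirms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EyePanel:
    """Resolution of the display seen by one eye (hypothetical helper)."""
    width_px: int
    height_px: int

    @property
    def pixels(self) -> int:
        return self.width_px * self.height_px


def combined_width(panel: EyePanel, eyes: int = 2) -> int:
    """Total horizontal pixels when the per-eye panels sit side by side."""
    return panel.width_px * eyes


QUEST_3 = EyePanel(width_px=2064, height_px=2208)  # figures from the text
UHD_4K_WIDTH = 3840  # width of a standard 4K UHD panel, for comparison

per_eye = QUEST_3.pixels                 # 4,557,312 pixels per eye
both_eyes = per_eye * 2                  # 9,114,624 pixels in total
side_by_side = combined_width(QUEST_3)   # 4,128 pixels across both eyes
```

Under this reading, the two panels together are slightly wider than a 4K UHD display, which is consistent with a “4K+” description.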

At the core of this headset is Qualcomm Technologies Inc.’s Snapdragon XR2 Gen2 spatial-computing platform, specifically designed to meet the requirements of the next generation of MR/VR devices and smart glasses. This single-chip architecture delivers high-quality images, seamless interactions and high power efficiency, removing the requirement for an additional battery pack. The solution embeds AI and low-latency video-see-through technology, providing a highly immersive experience.

Qualcomm has recently announced a “+” version, the Snapdragon XR2+ Gen2, which supports 4.3K-per-eye resolution at 90 fps and 12 or more concurrent cameras. The new chip is the result of Qualcomm’s collaboration with Samsung and Google, which will use this hardware platform to provide increasingly immersive and spatial solutions.
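A frame rate of 90 fps implies a fixed time budget per frame, and together with the per-eye resolution it implies a pixel throughput the chip must sustain. The sketch below works through that arithmetic. The source gives only “4.3K per eye,” so the 4,300 × 4,300 panel size used here is an assumption for illustration, and the function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render and display one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def pixels_per_second(width_px: int, height_px: int, fps: int, eyes: int = 2) -> int:
    """Pixels that must be produced each second across all eyes."""
    return width_px * height_px * eyes * fps


# 90 fps comes from the text; 4300 x 4300 per eye is an ASSUMED panel size.
budget = frame_budget_ms(90)                      # about 11.1 ms per frame
throughput = pixels_per_second(4300, 4300, 90)    # about 3.3 billion pixels/s
```

The budget of roughly 11 ms per frame has to cover rendering, camera processing and display, which is one reason low latency is treated as a headline requirement for these platforms.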

Microsoft HoloLens 2 is another MR headset. An important improvement in HoloLens 2 is its expanded field of view, which offers users a more immersive and realistic experience. Another noteworthy aspect is its ability to track hand movements. The device uses a blend of sensors and cameras to accurately monitor the user’s hand motions, allowing for natural and engaging gestures.

The HoloLens 2 runs on a custom-designed Microsoft Holographic Processing Unit (HPU) and a Qualcomm Snapdragon 850 processor. This hardware combination enhances performance, allowing for more intricate and lifelike holographic experiences. The head-mounted device is engineered to make daily tasks more efficient and effective across several industries. An important application of HoloLens 2 is advanced training, where AR is used to instruct trainees in their work environment by guiding them through digital processes.

Impacts on industries

The AR/VR/XR and metaverse technologies are becoming essential for numerous industrial sectors, providing significant advantages in design review, training, remote sales, e-commerce and brick-and-mortar retail operations. Here are several examples:

- Education and training: AR, VR and XR technologies change education and training through the provision of realistic simulations and immersive learning environments. These tools improve the development of skills and the retention of knowledge across various fields, including medical training and industrial simulations.

- Gaming and entertainment: The gaming industry has rapidly adopted the metaverse, offering gamers rich and interactive experiences. Entertainment is being redefined by virtual concerts, interactive storytelling and collaborative gaming experiences.

- Business and collaboration: Metaverse technologies allow teams in different locations to collaborate in virtual environments, enabling virtual conferences, collaborative design environments and immersive presentations.

Industrial applications, in particular, are one area where there is a lot of innovation. For example, Nvidia Omniverse is a flexible development platform specifically created for constructing 3D workflows, tools, applications and services in a modular fashion. It uses Pixar’s Universal Scene Description (OpenUSD) and Nvidia AI technology to enable developers to create real-time 3D simulation solutions for industrial digitalization and perception AI applications.
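To give a sense of what an OpenUSD scene description looks like, the sketch below builds a minimal layer in USD’s human-readable `.usda` text format using plain Python strings. It does not use the Omniverse platform or the USD Python bindings, and the prim names (`Plant`, `RobotCell`) and the helper `minimal_usda` are hypothetical, chosen only for illustration.

```python
def minimal_usda(root, part, translate):
    """Return a tiny .usda layer: one transform prim holding one cube.

    `root` and `part` are prim names; `translate` is an (x, y, z) offset
    applied to the cube. All values are illustrative placeholders.
    """
    x, y, z = translate
    return (
        "#usda 1.0\n"
        "(\n"
        f'    defaultPrim = "{root}"\n'
        ")\n"
        "\n"
        f'def Xform "{root}"\n'
        "{\n"
        f'    def Cube "{part}"\n'
        "    {\n"
        "        double size = 1\n"
        f"        double3 xformOp:translate = ({x}, {y}, {z})\n"
        '        uniform token[] xformOpOrder = ["xformOp:translate"]\n'
        "    }\n"
        "}\n"
    )


# A stand-in for one piece of equipment placed inside a factory scene.
layer_text = minimal_usda("Plant", "RobotCell", (2.0, 0.0, 1.5))
```

A real industrial scene would reference detailed geometry and many nested prims, but the same hierarchical structure of named prims and typed attributes applies.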

There are several projects or collaborations already underway.

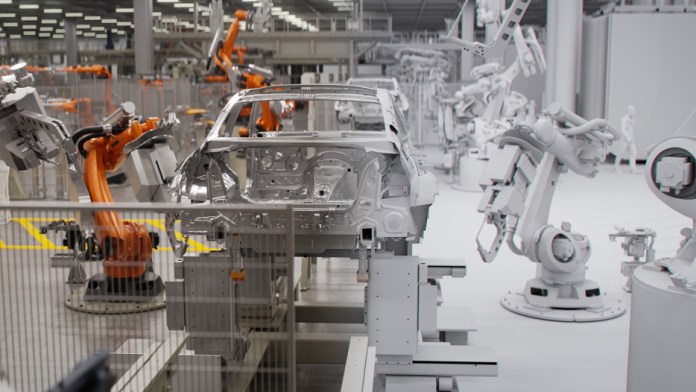

The BMW Group is using the Nvidia Omniverse Enterprise platform for building and operating 3D industrial metaverse applications to virtually validate and optimize manufacturing systems at its future automotive manufacturing plant in Debrecen, Hungary, the company’s first facility to be planned and validated virtually. The platform is used to run real-time digital-twin simulations to optimize layouts, robotics and logistic systems virtually.

The BMW Group is transforming the design of complex manufacturing systems using Nvidia’s Omniverse platform. (Source: BMW Group)

Siemens AG is also taking the same approach. In 2022, the company announced a collaboration agreement with Nvidia to use the Omniverse platform to build digital twins embedded in the industrial metaverse. By connecting the Siemens Xcelerator, an open digital platform, with Nvidia Omniverse, it will be possible to create a real-time and immersive VR world that seamlessly integrates hardware and software, spanning from the outermost devices to the cloud.

More recently, Sony Corp. unveiled a collaboration with Siemens at CES 2024 to introduce a cutting-edge solution that integrates Siemens Xcelerator industry software with Sony’s innovative spatial content creation system. This system includes the XR head-mounted display, equipped with high-quality 4K OLED microdisplays, as well as controllers for seamless interaction with 3D objects. The novel solution will initiate the process of generating content for the industrial metaverse.

Lam Research Corp. has developed Semiverse, the semiconductor metaverse, to enable a virtual fabrication environment in the semiconductor industry. The Semiverse is a simulated environment where development and testing are carried out collaboratively by humans and machines.

Semiverse can expedite chip innovation and shorten the time between the conception of a technology and its commercial availability. It can also improve collaboration, helping the industry deliver innovative technology more frequently. The digital depiction of the whole chip fabrication process in the Semiverse will enable chipmakers to analyze the interactions between processes across the fab, leading to enhanced repeatability and optimized yield.

Lam Research’s Semiverse Solutions delivers building blocks for virtual semiconductor fabrication for training, engineering and manufacturing applications. (Source: Lam Research Corp.)

While the metaverse, including the industrial metaverse, can have a widespread impact across industries, developers still face major hurdles. Addressing technical challenges like latency, hardware limitations and interoperability is critical for the widespread adoption of metaverse technologies.

Developers also need higher computing capability, lower latency and a more powerful network infrastructure, as well as high-resolution displays and optics. Challenges also remain around privacy, security, regulations and standards.
