BY JOEL WOODWARD

Senior Product Manager, Oscilloscopes

Agilent Technologies

www.agilent.com

Oscilloscope users rely on top-level specifications in determining the value of one oscilloscope relative to another. Three key specifications – bandwidth, sample rate, and acquisition memory depth – typically get top consideration when choosing which scope to use.

It's generally easy to grasp the value of additional bandwidth and faster sample rate. But while acquisition memory depth is often used as a primary purchase consideration (with bigger being better), fully understanding the benefits associated with memory size requires additional thought.

Deep memory offers considerable value in three areas that are often overlooked: capture period, sample-rate maintenance, and measurement/analysis quality. Understanding the relationship between these areas and memory lets the user make smarter choices.

Capturing longer periods

The most obvious benefit of deep acquisition memory is the capture of longer periods of time: deep memory helps in instances where the cause and effect may be separated by a significant time period, and plays a key role in viewing events that simply take a longer time to transpire.

Let's look at a few examples. Since the acquisition time window equals the memory depth divided by the sample rate [(Acquisition Time Window) = (Memory Depth)/(Sample Rate)], a scope with 100 Mpoints of memory per channel and a sample rate of 10 Gsamples/s per channel can capture 10 ms of time on each channel. (Note that, for scopes, points and samples are equivalent.) If the same scope had just 1 Mpoint of memory per channel, it could capture only 0.1 ms of time at the same sample rate.
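The arithmetic in this example can be checked with a minimal Python sketch. The function name `acquisition_window_s` is hypothetical and chosen only for illustration; the numbers are the ones quoted above.

```python
def acquisition_window_s(memory_points: float, sample_rate_sa_per_s: float) -> float:
    """Acquisition time window in seconds = memory depth / sample rate."""
    if sample_rate_sa_per_s <= 0:
        raise ValueError("sample rate must be positive")
    return memory_points / sample_rate_sa_per_s


# 100 Mpoints vs. 1 Mpoint per channel, both sampled at 10 Gsamples/s
deep_window = acquisition_window_s(100e6, 10e9)
shallow_window = acquisition_window_s(1e6, 10e9)

print(f"deep memory:    {deep_window * 1e3:.1f} ms")
print(f"shallow memory: {shallow_window * 1e3:.1f} ms")
```

Under these assumptions the two windows differ by the same factor of 100 as the memory depths.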

As a general rule of thumb, the faster the sample rate, the deeper the memory must be to capture the same time window. A wide variety of applications and tests benefit from longer time captures, so deep memory gives engineers more flexibility when they encounter one.

One method of capturing longer windows of time is changing the scope's acquisition mode to segmented memory. Advanced scopes from major vendors include a mode that allows memory to be partitioned into smaller segments. With segmented memory, the user specifies how many segments the memory should be divided into, with each segment having equal length. When the scope sees the first trigger event, it fills the first segment of acquisition memory. Once this segment is full, the scope starts a counter and looks for the next trigger event. When this second trigger event occurs, it stores samples to the next segment of memory. This process repeats until all segments are full, or until the user tells the scope to stop looking for additional trigger events.

Segmented mode is particularly useful for acquiring signals when there is significant time between trigger events of interest, a category into which many serial buses, optics, and communication signals fit. In normal mode, scopes with deep memory and high sample rates can capture and store a few milliseconds; by contrast, some scopes using segmented memory can capture time windows that span seconds, hours, or even days and still maintain fast sample rates (Fig. 1).

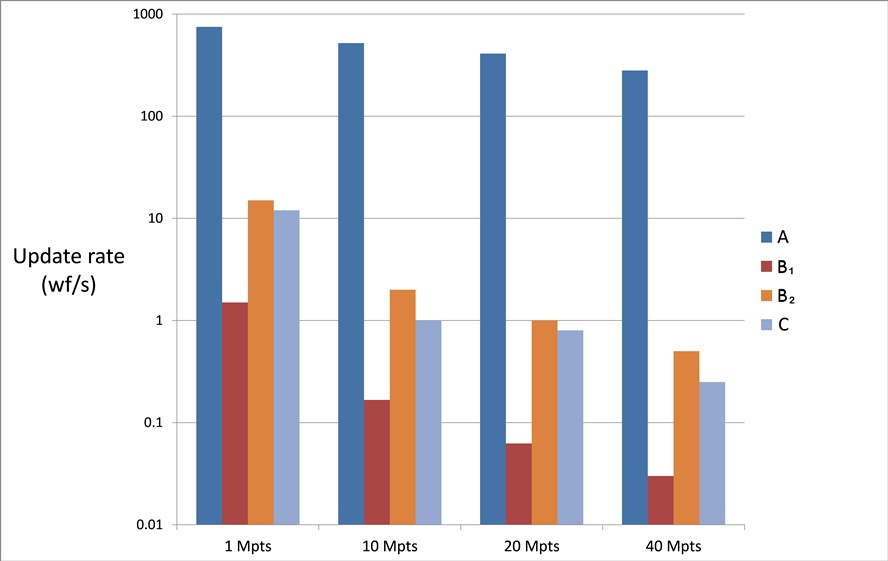

Fig. 1: A scope's architecture determines how responsive the scope is when deep memory is enabled. Update rate, the number of waveforms a scope can capture and process per second, is a good objective measure of how well the scope handles deep memory. As noted in the graphic, with update rate shown on a log scale, there is a huge variation in update rate between scopes as deep memory is turned on.

How does deep memory enhance segmented memory? First, users can choose to use the additional memory to capture a larger number of segments; on Agilent's Infiniium scopes, for example, users can specify that memory be divided into up to 131,000 segments! Second, for a given number of segments, users can increase the memory depth of each segment, providing the ability to see more signal activity around each trigger point; with 1 Gpoint of memory per channel and 1,000 segments, each segment can be 1 Mpoint – a decent amount of memory and time capture per segment.
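As a rough sketch of the per-segment arithmetic, the Python below divides the total memory evenly across segments and converts the result to a time window. The function names are hypothetical, the 10-Gsamples/s rate is borrowed from the earlier example and is an assumption here, and any per-segment overhead a real scope may reserve is ignored.

```python
def points_per_segment(total_points: int, segments: int) -> int:
    """Evenly divide acquisition memory across equal-length segments."""
    if segments < 1:
        raise ValueError("at least one segment is required")
    return total_points // segments


def segment_window_s(total_points: int, segments: int, sample_rate_sa_per_s: float) -> float:
    """Time captured around each trigger event, in seconds."""
    return points_per_segment(total_points, segments) / sample_rate_sa_per_s


TOTAL_POINTS = 1_000_000_000   # 1 Gpoint per channel (from the text)
SEGMENTS = 1_000               # from the text
ASSUMED_RATE = 10e9            # 10 Gsamples/s: an assumption, not stated for this example

depth = points_per_segment(TOTAL_POINTS, SEGMENTS)
window = segment_window_s(TOTAL_POINTS, SEGMENTS, ASSUMED_RATE)
print(f"{depth} points per segment, {window * 1e6:.0f} us per segment")
```

With these inputs each segment holds 1 Mpoint, which at the assumed rate corresponds to 100 µs of signal around each trigger.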

Maintaining fast sampling

The second primary advantage of deep acquisition memory is the ability to maintain a fast sample rate over a larger number of timebase settings. Engineers tend to think of oscilloscope specifications as values that remain constant, independent of how scope controls are set. Unfortunately, this isn't the case. Let's take a quick look at how memory depth can impact the sample rate and overall bandwidth of an oscilloscope as the user changes the horizontal timebase.

Most scope users don't think about this aspect of acquisition memory. In fact, the value of deep memory in preserving a higher sample rate, and hence higher bandwidth, over a larger number of timebase settings is not commonly considered. However, it makes a huge difference in the ability of the scope to maintain its other key specifications: sample rate and bandwidth.

Let's look at a real-life example. An engineer chooses a 4-GHz-bandwidth oscilloscope equipped standard with 10 Mpoints of memory per channel and a maximum sample rate of 10 Gsamples/s. These specifications appear bullet-proof and the engineer begins using the scope. The engineer chooses a fast 10 ns/div timebase setting. The scope samples at 10 Gsamples/s and, with ten divisions spanning just 100 ns, uses only 1 kpoint of memory. The entire 4 GHz of effective bandwidth is preserved, as the engineer anticipates.

Remember the formula, (Acquisition Time Window) = (Memory Depth)/(Sample Rate)? The engineer needs to see more time on screen and thus turns the horizontal timebase knob to slower settings; the engineer chooses 200 µs/div. To fill 10 horizontal divisions (2 ms) sampling at 10 Gsamples/s, the scope needs 20 Mpoints of memory. Since only 10 Mpoints are available, the scope compensates by dropping the sample rate by a factor of two, to 5 Gsamples/s. The scope can now acquire 10 Mpoints of samples and fill all ten horizontal divisions with signal values. The engineer needs to see an even bigger time window at once, and hence changes the scope's timebase to 1 ms/div. As the scope has just 10 Mpoints of acquisition memory, to capture 10 ms of activity, the scope must drop its sample rate again, this time to 1 Gsample/s. As the engineer changed the timebase setting, the 10-Mpoint memory limitation reduced the scope's sample rate and overall effective bandwidth.
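The sample-rate drop in this walk-through follows directly from the formula, as the following Python sketch illustrates. The function name `sample_rate_for_timebase` is hypothetical, and the model is simplified: it assumes ten horizontal divisions and lets the sample rate take any value, whereas a real scope may step through a fixed set of rates.

```python
def sample_rate_for_timebase(time_per_div_s: float,
                             memory_points: float,
                             max_sample_rate: float,
                             divisions: int = 10) -> float:
    """Highest sample rate (Sa/s) at which the on-screen window still fits in memory."""
    window_s = time_per_div_s * divisions
    return min(max_sample_rate, memory_points / window_s)


MEMORY_POINTS = 10e6   # 10 Mpoints per channel
MAX_RATE = 10e9        # 10 Gsamples/s

# The three timebase settings from the example: 10 ns/div, 200 us/div, 1 ms/div
rates = {tb: sample_rate_for_timebase(tb, MEMORY_POINTS, MAX_RATE)
         for tb in (10e-9, 200e-6, 1e-3)}

for tb, rate in rates.items():
    points_used = rate * tb * 10
    print(f"{tb:.0e} s/div: {rate / 1e9:g} GSa/s, {points_used:,.0f} points")
```

Under these assumptions the sketch should reproduce the figures above: full rate with about 1,000 points at 10 ns/div, then 5 Gsamples/s and 1 Gsample/s once the 10-Mpoint limit is reached.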

The user did not realize that, with a fixed amount of memory, changing the horizontal timebase control caused the scope to decrease its sample rate and, with it, its overall effective bandwidth. The front end of the scope still lets frequencies up to 4 GHz pass through. However, the scope can now alias signal frequencies, since it is no longer sampling fast enough. The scope industry has standardized on sample rate being 2.5 times bandwidth. So, while the front end still allows signal frequencies of up to 4 GHz to pass through to the scope's ADC, at 1 Gsample/s the converter has a sample rate sufficient for only 400 MHz of bandwidth (1 Gsample/s divided by 2.5), and signal components at higher frequencies can be aliased. The user reduced the effective scope bandwidth from 4 GHz to 400 MHz by changing the timebase setting, and so can no longer rely on accurate measurements or accurate signal viewing using the scope. This measurement error was made possible by the scope having only 10 Mpoints of maximum memory.
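To make the bandwidth reduction concrete, here is a small Python sketch that applies the 2.5x rule of thumb cited above. The function name `effective_bandwidth_hz` is hypothetical, and treating effective bandwidth as the lesser of the front-end rating and sample rate divided by 2.5 is a simplification for illustration, not a vendor formula.

```python
def effective_bandwidth_hz(sample_rate_sa_per_s: float,
                           rated_bandwidth_hz: float,
                           ratio: float = 2.5) -> float:
    """Bandwidth usable without undersampling, assuming sample rate = ratio x bandwidth."""
    return min(rated_bandwidth_hz, sample_rate_sa_per_s / ratio)


RATED_BW = 4e9  # 4-GHz front end, from the example

# Sample rates reached at 10 ns/div, 200 us/div, and 1 ms/div with 10 Mpoints
bandwidths = [effective_bandwidth_hz(rate, RATED_BW) for rate in (10e9, 5e9, 1e9)]

for rate, bw in zip((10e9, 5e9, 1e9), bandwidths):
    print(f"{rate / 1e9:g} GSa/s -> {bw / 1e6:g} MHz effective bandwidth")
```

The three results trace the example: the full 4 GHz at 10 Gsamples/s, 2 GHz at 5 Gsamples/s, and 400 MHz at 1 Gsample/s.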

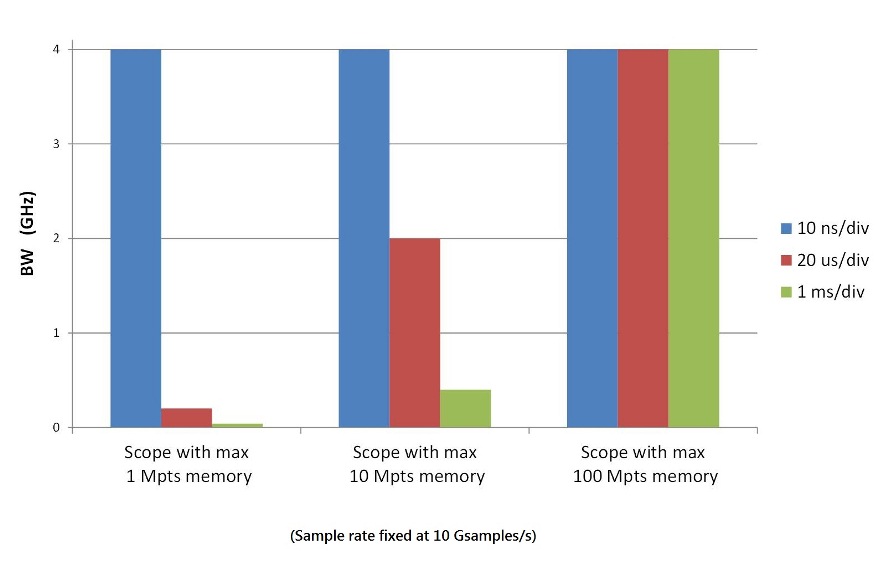

What if the scope had a memory of 1 Gpoint instead of 10 Mpoints? At the fast timebase setting of 10 ns/div, the scope would retain the maximum sample rate of 10 Gsamples/s and hence would not alias signals up to the rated 4-GHz bandwidth of the scope. At the slower sweep speed of 1 ms/div, or even up to 5 ms/div, the scope can still acquire at 10 Gsamples/s and hence will accurately represent signals up to the full 4-GHz bandwidth of the scope. Surprisingly, memory depth is indeed linked with effective scope bandwidth when horizontal timebase settings are changed. Scopes with deeper memory preserve a high sample rate, and the ability to use the full rated bandwidth of the scope, over a larger range of timebase settings (Fig. 2).

Fig. 2: Having deeper memory enables the scope to retain a faster sample rate and full scope bandwidth as the user adjusts the timebase control to slower settings.
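One way to compare the two memory depths is to compute the slowest timebase setting at which the scope can still run at its maximum sample rate. The Python sketch below does this; the function name is hypothetical, and it assumes ten horizontal divisions and that the whole memory is available to a single on-screen acquisition.

```python
def slowest_full_rate_timebase_s(memory_points: float,
                                 max_sample_rate: float,
                                 divisions: int = 10) -> float:
    """Largest time/div (seconds) that still fits in memory at the maximum sample rate."""
    return memory_points / (max_sample_rate * divisions)


MAX_RATE = 10e9  # 10 Gsamples/s

shallow_limit = slowest_full_rate_timebase_s(10e6, MAX_RATE)  # 10 Mpoints
deep_limit = slowest_full_rate_timebase_s(1e9, MAX_RATE)      # 1 Gpoint

print(f"10 Mpoints: full rate down to {shallow_limit * 1e6:g} us/div")
print(f"1 Gpoint:   full rate down to {deep_limit * 1e3:g} ms/div")
```

Under these assumptions the 10-Mpoint scope leaves full rate beyond 100 µs/div, consistent with the drop seen at 200 µs/div, while the 1-Gpoint scope holds 10 Gsamples/s out to 10 ms/div, which covers the 1 ms/div and 5 ms/div settings mentioned above.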

Get better measurement and analysis

The third primary value of deep memory is quality of measurements. Have you ever considered whether taking rise time measurements on 1,000 acquisitions, each with 1 kpoint of memory (1 kpoint x 1,000), yields better results than taking a rise time measurement on a single acquisition of 1 Mpoint? Ever wondered how an FFT with 10 kpoints of memory differs from one with 10 Mpoints? Ever considered how deep versus shallow memory impacts jitter characterization? These are great questions, and deep memory has an impact on all of them.

How much memory the scope uses for analysis is a balance. If set too low, the scope will have difficulty providing meaningful analysis. For example, when doing eye recovery and jitter analysis, if the memory is set too low the scope can't do PLL clock recovery, as it won't see enough edges. Also, shallow memory inhibits statistically valid analysis; such functions as FFTs and histograms won't have enough points on which to operate (Fig. 3). If the memory is set too high, the tradeoff is that the scope's responsiveness suffers, as processing times for analysis increase significantly.

Fig. 3: FFTs and other functions yield better results when more points are used. For FFTs, resolution bandwidth is equal to the sample rate divided by the number of points in the FFT. So, to achieve more precise resolution bandwidth, the scope must acquire and process more samples. The scope screen shot above shows the difference between an FFT made on a 100-kpoint acquisition, and one made on a 10-Mpoint acquisition of the exact same signal.
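The resolution-bandwidth relationship in the caption can be sketched in a few lines of Python. The function name `fft_resolution_bandwidth_hz` is hypothetical, and the 10-Gsamples/s rate is an assumption carried over from the earlier examples; the caption does not state the sample rate used for the screen shot.

```python
def fft_resolution_bandwidth_hz(sample_rate_sa_per_s: float, fft_points: int) -> float:
    """Resolution bandwidth = sample rate / number of points in the FFT."""
    if fft_points < 1:
        raise ValueError("FFT needs at least one point")
    return sample_rate_sa_per_s / fft_points


ASSUMED_RATE = 10e9  # assumption: not given in the caption

rbw_100k = fft_resolution_bandwidth_hz(ASSUMED_RATE, 100_000)     # 100-kpoint acquisition
rbw_10m = fft_resolution_bandwidth_hz(ASSUMED_RATE, 10_000_000)   # 10-Mpoint acquisition

print(f"100 kpoints: {rbw_100k / 1e3:g} kHz per bin")
print(f"10 Mpoints:  {rbw_10m / 1e3:g} kHz per bin")
```

Whatever the actual sample rate, the ratio between the two cases is fixed: 100 times more points gives 100 times finer frequency resolution.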

Scope users can manually control how much memory to enable on most scopes, so when to enable the additional memory depends on the task. With too little, your scope will capture shorter windows of time, will be more susceptible to sample rate and bandwidth reduction as the timebase is changed to slower settings, and will not provide good analysis results.

With too much memory, your scope will spend more time processing each acquisition, making the scope more sluggish. While you may not want to have deep memory turned on all the time, having the additional memory available on your scope is a huge benefit for the times that you need it for debug and testing.
