By Scott Seiden, software solutions senior manager, Keysight Technologies, Inc.

Electronics are getting more sophisticated and complex every day. Chips, boards, and the systems they comprise are increasingly difficult to design. Companies engaged in electronic product development have regularly employed platform-based approaches in their design projects for more than two decades since the inception of the CAD Framework Initiative (CFI). Platform-based design offers many benefits including faster time to market, increased engineering productivity and cost efficiency, improved design reuse, and faster analysis and insight that supports better decision-making.

Beyond these benefits, the trend toward disaggregation of the semiconductor and electronics industries served as a major driver for platform-based design. The demands of higher design complexity made vertical integration of the entire development and manufacturing process impractical; hence the rise of fabless semiconductor companies, chip foundries, and contract electronics manufacturing services businesses. With disaggregation came the ability to focus engineering resources on a company’s core competency and competitive market differentiation. Design platforms that deliver a complete solution for a particular problem or domain area help to reinforce core competencies.

If platform-based design is so successful, why are platform-based test approaches not more widely available and adopted? After all, product designs must be tested before going to market. The same electronics industry trends apply in the case of test. So what is stopping test teams from benefiting from the same type of platform approach as design teams? The answer is siloed workflows and homegrown test environments that inhibit easy data sharing.

Much like early computer-aided engineering and design products (CAE/CAD), which were proprietary in nature, electronic test and measurement is a closely held business. Many companies build proprietary, in-house methodologies and tools to tackle their specific test challenges without regard for the resources required to maintain them. Recent Keysight research results reported in “Realize the Future of Testing and Validation Workflows Today” confirmed this point, with 91% of respondents saying they created in-house tools for testing and verification. Test engineers follow tried-and-true methods. They resist change from legacy ways to a more open and connected approach.

In the design world, interoperability standards and tool frameworks accelerated the movement to open systems and software solutions. They bridged the gap between logical and physical abstractions and their respective workflows. The collective work of the CAD Framework Initiative during the early and mid-1990s helped make EDA tools and methods more accessible and interoperable. Frameworks also provided the means to better manage the ever-growing volumes of design data. While the design world is now accustomed to the benefits associated with interoperability and platform-based methods, the test world still suffers from silos and poor handoffs between different steps in the work process.

Test platform and framework architecture requirements

Because the design world adopted open frameworks and interoperability, the test community should embrace a platform-based approach built around open frameworks and interoperability, too. For example, Keysight and Nokia recently launched OpenTAP, the open Test Automation Project, as a first step in this direction. OpenTAP provides an open-source, scalable architecture that enhances and accelerates the development of automation solutions within the test and measurement ecosystem, with demonstrated success in 5G network equipment manufacturing.

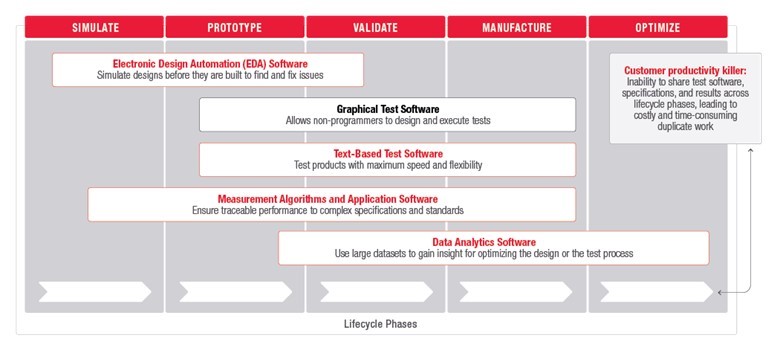

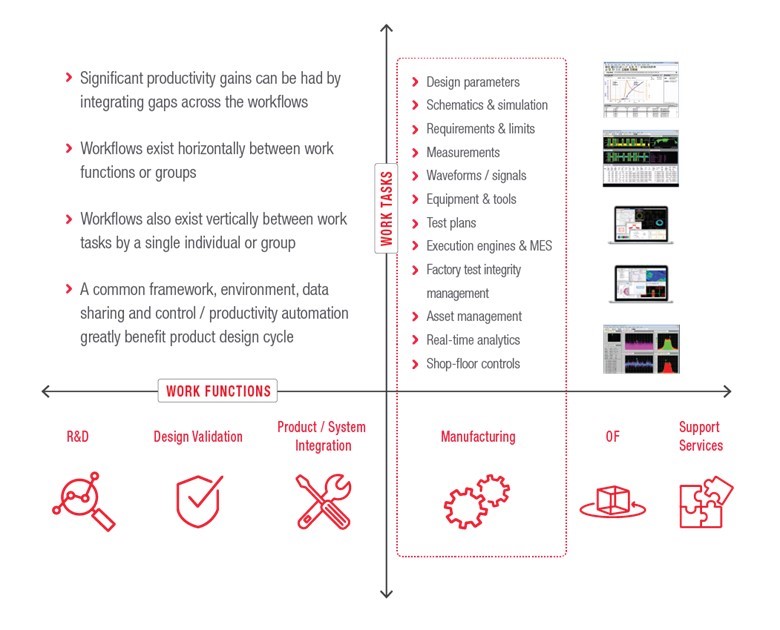

While test automation is a necessary component of a platform-based solution, it does not address the complete workflow or end-to-end architecture. To enable faster solution development, maximize productivity through connected workflows, and integrate insights into operational systems, a platform-based test solution should cover the full development life cycle (Fig. 1). It should also consider both horizontal work functions and vertical work tasks (Fig. 2).

Fig. 1: Test platforms must cover the whole electronic product development life cycle, from simulation to optimization.

Fig. 2: Test platforms must incorporate both horizontal work functions and vertical work tasks.

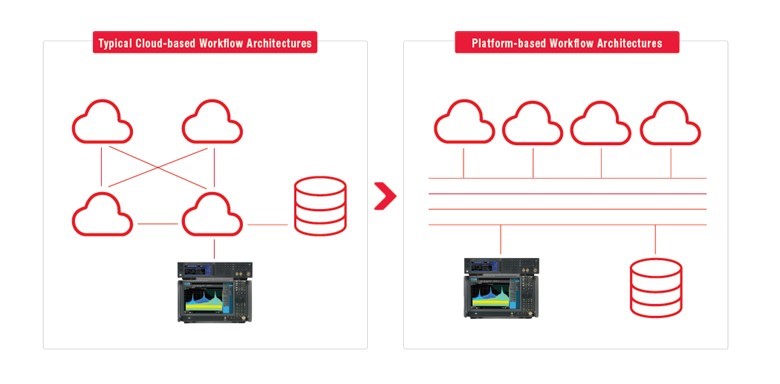

To support both horizontal work functions and vertical work tasks, platform-based test architectures need a new framework that is open, flexible, and scalable (Fig. 3). The central elements in a new framework are a high-performance communication fabric and an easy-to-use integration kit that together allow rapid “plug and play” between homegrown tools and commercially available tools. The communication fabric is the infrastructure that streamlines the necessary transactions between participants in the platform. The integration kit is a set of rules and programming interfaces used to connect application plug-ins with the fabric.

Fig. 3: Platform-based test architectures must feature an open, flexible, and scalable connecting fabric.
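The relationship between the fabric and the integration kit can be illustrated with a minimal Python sketch. It is not OpenTAP or any Keysight API; every name in it (`Fabric`, `Plugin`, `attach`, the topic strings, and the example limit) is a hypothetical stand-in. The fabric is modeled as an in-process publish/subscribe bus, and the "integration kit" is reduced to a single rule: a plug-in must implement `attach()`.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class Fabric:
    """Toy in-process message bus standing in for the connecting fabric."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> int:
        """Deliver a message to every subscriber; return how many received it."""
        handlers = list(self._subscribers[topic])
        for handler in handlers:
            handler(message)
        return len(handlers)


class Plugin:
    """Hypothetical integration-kit rule: every plug-in implements attach()."""

    def attach(self, fabric: Fabric) -> None:
        raise NotImplementedError


class HomegrownLimitChecker(Plugin):
    """Stand-in for an in-house tool that judges measurements against a limit."""

    def __init__(self, limit_dbm: float) -> None:
        self.limit_dbm = limit_dbm

    def attach(self, fabric: Fabric) -> None:
        self._fabric = fabric
        fabric.subscribe("measurement", self._on_measurement)

    def _on_measurement(self, msg: Dict[str, Any]) -> None:
        verdict = "pass" if msg["power_dbm"] <= self.limit_dbm else "fail"
        self._fabric.publish("verdict", {"test": msg["test"], "verdict": verdict})


class ResultLogger(Plugin):
    """Stand-in for a third-party tool that consumes verdicts."""

    def __init__(self) -> None:
        self.records: List[Dict[str, Any]] = []

    def attach(self, fabric: Fabric) -> None:
        fabric.subscribe("verdict", self.records.append)


fabric = Fabric()
checker = HomegrownLimitChecker(limit_dbm=-30.0)  # illustrative limit only
logger = ResultLogger()
for plugin in (checker, logger):
    plugin.attach(fabric)

# Made-up measurements, published as if by an instrument plug-in.
fabric.publish("measurement", {"test": "spurious_emission", "power_dbm": -42.5})
fabric.publish("measurement", {"test": "adjacent_channel", "power_dbm": -12.0})
print(logger.records)
```

In this sketch the two plug-ins never reference each other; they share only the fabric and the message shape. That loose coupling is what lets a homegrown tool and a commercial tool be swapped independently.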

In addition to providing the proper connections, the framework must foster the exchange and co-creation of value between users. The framework should attract system engineers, hardware engineers, lab managers, test planners, and system administrators to use and contribute to it for technical and business purposes. When the framework engages users by delivering compelling value connections, it turns community members into both producers and consumers of new capabilities and data not possible with homegrown solutions.

Also key to a framework’s success are a common data model and a common user experience. A common data model allows producers to quickly develop and plug in their own unique intellectual property (IP) to the framework. Once the IP is connected to the framework, consumers can deploy solutions and workflows. A common user experience provides a centralized view into the platforms and their management.

Platform-based design and test workflow vision

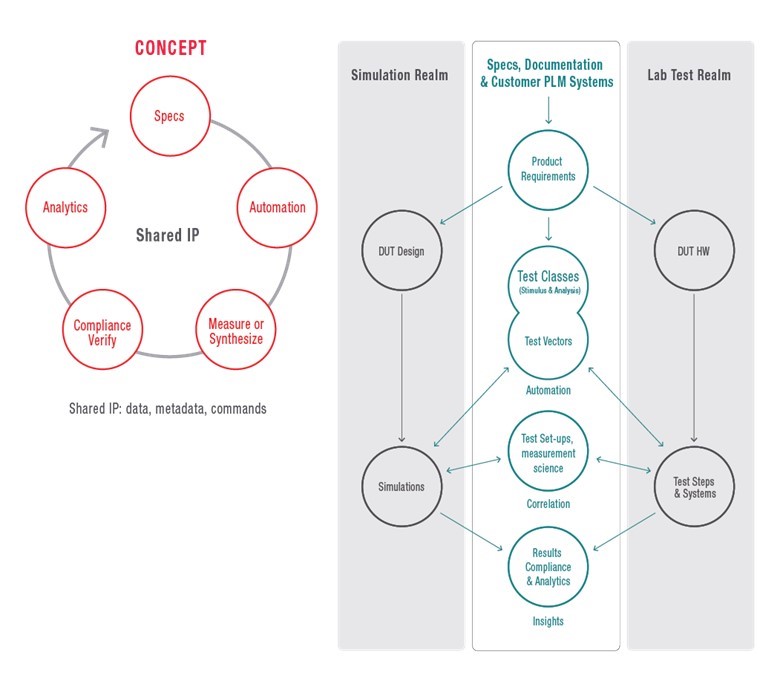

As an example of an ideal platform concept, consider a specification-driven workflow that starts and ends with the actual product specification, linking simulation with reality. The specification and test vectors assist in automation, measurement, compliance, and analytics (Fig. 4). Engineers break down product specifications into requirements and then automatically translate test classes/vectors into a format that all framework plug-ins understand and share throughout the development life cycle.

Fig. 4: Connecting design and test environments using a common data model and user experience supports creation of specification-driven workflows.

Automated translation of test classes and vectors into test plans drives both simulations and lab tests, eliminating a time-consuming, manual process and providing consistency in the way that engineers perform tests in both environments. Typically, engineers model test setups in simulation, but it is not easy to share the same measurement science between the two environments, which makes it hard to reduce the variance in simulation-to-test correlation. To reduce that variance, engineers can use the same stimulus and analysis software plug-ins in both simulation and test to stimulate and analyze the device under test (DUT). A common design and test platform improves the correlation of test lab results to simulated results and also accrues significant benefits from software asset reuse.
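The idea of sharing one stimulus and one analysis routine across simulation and lab can be sketched as follows. This is an illustration under stated assumptions, not a real measurement flow: `simulated_dut` is a made-up ideal amplifier model, `lab_dut` is a placeholder for a real instrument capture, and the gain figures are invented for the example.

```python
import math
from typing import Callable, List, Sequence

Dut = Callable[[Sequence[float]], List[float]]


def make_stimulus(freq_hz: float, sample_rate_hz: float, n_samples: int) -> List[float]:
    """Shared stimulus plug-in: a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]


def rms(samples: Sequence[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def gain_db(stimulus: Sequence[float], response: Sequence[float]) -> float:
    """Shared analysis plug-in: voltage gain in dB from RMS levels."""
    return 20 * math.log10(rms(response) / rms(stimulus))


def simulated_dut(stimulus: Sequence[float]) -> List[float]:
    """Hypothetical simulation model: ideal amplifier with a gain of 2."""
    return [2.0 * s for s in stimulus]


def lab_dut(stimulus: Sequence[float]) -> List[float]:
    """Placeholder for a bench capture; a real plug-in would call an
    instrument driver here. The 1.9 gain is an invented value."""
    return [1.9 * s for s in stimulus]


def run(dut: Dut, stimulus: Sequence[float]) -> float:
    """Apply the same stimulus and the same analysis to any DUT backend."""
    return gain_db(stimulus, dut(stimulus))


stimulus = make_stimulus(freq_hz=1_000.0, sample_rate_hz=48_000.0, n_samples=480)
sim_gain = run(simulated_dut, stimulus)
lab_gain = run(lab_dut, stimulus)
print(f"simulation: {sim_gain:.2f} dB, lab: {lab_gain:.2f} dB, "
      f"delta: {sim_gain - lab_gain:.2f} dB")
```

Because both backends pass through `make_stimulus` and `gain_db`, any remaining delta comes from the DUT itself rather than from differences in how the two environments generate or analyze signals.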

In traditional siloed workflows, volumes of simulation and test data reside in a file that must be sorted manually, which is time-consuming and error-prone. A platform-based workflow tags all results with relevant metadata, such as date, product version, workspace, test performed, and test vector parameters. Because the results are tagged, engineers can tell which test is associated with the data and how it was done.
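A minimal sketch of tagged results, assuming a simple record type and a tag-based query helper. The class name, tag keys, and all values below are hypothetical and chosen only to mirror the metadata listed above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaggedResult:
    """One measurement plus the metadata that explains where it came from."""
    value: float
    unit: str
    tags: Dict[str, str] = field(default_factory=dict)


def find(results: List[TaggedResult], **criteria: str) -> List[TaggedResult]:
    """Return the results whose tags match every given key/value pair."""
    return [r for r in results
            if all(r.tags.get(key) == wanted for key, wanted in criteria.items())]


# Invented example data; tag names follow the list in the text.
results = [
    TaggedResult(128.0, "mV", {"date": "2020-01-15", "product_version": "revB",
                               "workspace": "serdes", "test": "eye_height",
                               "source": "simulation", "data_rate_gbps": "10"}),
    TaggedResult(96.5, "mV", {"date": "2020-02-03", "product_version": "revB",
                              "workspace": "serdes", "test": "eye_height",
                              "source": "lab", "data_rate_gbps": "10"}),
    TaggedResult(3.9, "ps", {"date": "2020-02-03", "product_version": "revB",
                             "workspace": "serdes", "test": "jitter",
                             "source": "lab", "data_rate_gbps": "10"}),
]

lab_eye = find(results, test="eye_height", source="lab")
print([(r.value, r.unit) for r in lab_eye])
```

With the tags in place, a question such as "which lab runs measured eye height on revB?" becomes a query instead of a manual search through files.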

Next, engineers can automatically populate a compliance matrix with pass/fail criteria and summary results. For deeper analysis, engineers can click on any test result in the compliance matrix to open a waveform viewer. Then engineers can view a waveform for a given test against any other waveform of the same test, regardless of whether it is simulation or test data, to perform comparisons and gain critical insights.
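How a compliance matrix might be populated from specification limits can be sketched as below. The limits, test names, and values are invented for illustration, and the `Limit` and `build_matrix` names are assumptions rather than part of any real tool.

```python
from typing import Dict, List, NamedTuple, Optional


class Limit(NamedTuple):
    """Pass/fail criterion from the specification; None means unbounded."""
    low: Optional[float]
    high: Optional[float]


def verdict(value: float, limit: Limit) -> str:
    if limit.low is not None and value < limit.low:
        return "fail"
    if limit.high is not None and value > limit.high:
        return "fail"
    return "pass"


def build_matrix(limits: Dict[str, Limit],
                 simulation: Dict[str, float],
                 lab: Dict[str, float]) -> List[Dict[str, str]]:
    """One row per specified test, one verdict column per data source."""
    rows = []
    for test, limit in limits.items():
        row = {"test": test}
        for source, values in (("simulation", simulation), ("lab", lab)):
            value = values.get(test)
            row[source] = "missing" if value is None else verdict(value, limit)
        rows.append(row)
    return rows


# Invented limits and summary results.
limits = {
    "eye_height_mv": Limit(low=100.0, high=None),
    "jitter_ps": Limit(low=None, high=5.0),
    "rise_time_ps": Limit(low=20.0, high=40.0),
}
simulation = {"eye_height_mv": 128.0, "jitter_ps": 3.2, "rise_time_ps": 31.0}
lab = {"eye_height_mv": 96.5, "jitter_ps": 3.9}  # rise time not yet measured

matrix = build_matrix(limits, simulation, lab)
for row in matrix:
    print(f"{row['test']:<15} sim={row['simulation']:<8} lab={row['lab']}")
```

Reporting "missing" rather than silently skipping a test keeps gaps in lab coverage visible alongside the pass/fail verdicts.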

More engineers and their managers are recognizing how a platform-based test workflow breaks down homegrown test environment silos and fosters software reuse. To achieve results that accelerate time to market and dramatically improve productivity, test professionals need a new interoperability framework founded on an open, flexible, and scalable architecture. Key framework characteristics include a high-performance communication fabric, an easy-to-use plug-in integration kit, and a common data model and user experience. Engineers and test operations executives who want to maximize return on investment while focusing on their core competencies must look to a platform-based test approach that leverages the best of homegrown and third-party IP.

About the author

Scott Seiden is software solutions senior manager at Keysight Technologies, Inc. He has spent more than 25 years working in the EDA, semiconductor, and networking industries for global market leaders such as Cadence, Xilinx, and Cisco. At Keysight, Scott works on the PathWave software solutions team focused on accelerating customers’ design and test workflows.
