By Majeed Ahmad, contributing writer

A smooth developer experience is fundamental to artificial-intelligence designs. Development toolkits can streamline the preparation of trained neural networks for edge and low-latency data-center deployments.

Production-grade AI development kits have become crucial to overcoming software-support limitations and other barriers to AI adoption, especially when it comes to accelerating inference in edge endpoint devices constrained by size and power. These kits bridge the gap between popular training frameworks such as TensorFlow and highly constrained edge and IoT deployments.

Take the example of the Mythic SDK, which AI chipmaker Mythic has developed for its intelligence processing unit (IPU) platform, targeted at smart camera systems, industrial robots, and intelligent home appliances. The Mythic IPU architecture features an array of tiles, and each tile has four main hardware components.

First, a large analog compute array stores the bulky neural-network weights. Second, a local SRAM holds the data passed between neural-network nodes. Third, a single-instruction multiple-data (SIMD) unit handles processing operations not supported by the analog compute array. Fourth, a nano-processor controls the sequencing and operation of the tile.
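To make the division of labor inside a tile concrete, here is a toy Python model. It is a minimal sketch under stated assumptions, not Mythic's design or API: the `Tile` class and its method names are hypothetical, the analog array is modeled as an ordinary integer matrix-vector product, and ReLU stands in for whatever operations the SIMD unit actually handles.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Tile:
    """Hypothetical software model of one IPU tile (not Mythic's actual design)."""
    weights: List[List[int]]                        # stands in for the analog compute array
    sram: List[int] = field(default_factory=list)  # local buffer for intermediate data

    def analog_matvec(self, x: List[int]) -> List[int]:
        # The analog array computes a matrix-vector product where the weights live;
        # here it is simply an integer dot product per output row.
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]

    def simd_relu(self, values: List[int]) -> List[int]:
        # Assumption: an activation function is one of the operations left to SIMD.
        return [max(0, v) for v in values]

    def run(self, x: List[int]) -> List[int]:
        # The nano-processor's role, modeled as plain sequencing of the two steps,
        # with intermediate results staged in the tile's SRAM.
        self.sram = self.analog_matvec(x)
        self.sram = self.simd_relu(self.sram)
        return self.sram


tile = Tile(weights=[[1, -2], [3, 4]])
print(tile.run([5, 6]))  # [0, 39]
```

The first output row is 1·5 − 2·6 = −7, which the ReLU step clamps to 0; the second is 3·5 + 4·6 = 39.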

The Mythic SDK works in two stages: optimization and compilation. The Mythic Optimization Suite transforms the neural network into a form that is compatible with analog compute-in-memory, including quantization from floating-point values to 8-bit integers.
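The article does not say which quantization scheme the Mythic Optimization Suite applies. Purely as a generic illustration, the sketch below shows symmetric, per-tensor int8 quantization: one scale factor maps the largest weight magnitude to 127, and every weight is rounded to the nearest integer step. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to int8 (generic scheme)."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 127]
```

Each dequantized weight lies within half a quantization step (scale / 2) of the original, which is the accuracy trade made for storing one byte instead of four per weight.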

The Mythic Graph Compiler then converts the neural-network compute graph into machine code through an automated series of steps, including mapping, packing, optimization, and code generation, and produces the binary image that runs on the IPU architecture.
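Packing, in this context, means fitting each layer's weights into the fixed capacity of the tiles. The article does not describe the compiler's algorithm, so the following is only a sketch of one classic heuristic, first-fit decreasing, with hypothetical layer names, sizes, and tile capacity in arbitrary units.

```python
from typing import Dict, List


def pack_layers(layer_sizes: Dict[str, int], tile_capacity: int) -> List[List[str]]:
    """First-fit-decreasing packing of layer weights into fixed-capacity tiles."""
    tiles: List[List[str]] = []  # layer names assigned to each tile
    free: List[int] = []         # remaining capacity of each tile
    # Place the largest layers first; they are the hardest to fit.
    for name, size in sorted(layer_sizes.items(), key=lambda kv: kv[1], reverse=True):
        if size > tile_capacity:
            raise ValueError(f"layer {name} ({size}) exceeds one tile and would need splitting")
        for i, space in enumerate(free):
            if size <= space:    # first tile with enough room wins
                tiles[i].append(name)
                free[i] -= size
                break
        else:                    # no existing tile fits: open a new one
            tiles.append([name])
            free.append(tile_capacity - size)
    return tiles


print(pack_layers({"conv1": 60, "conv2": 50, "fc": 30, "head": 10}, tile_capacity=100))
# [['conv1', 'fc', 'head'], ['conv2']]
```

A production compiler would also have to split layers larger than one tile and weigh data movement between tiles; this sketch simply rejects oversized layers.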

As a result, developers have a packaged binary containing everything that the host driver needs to program the accelerator chip and run neural networks in a real-time environment.

Neural nets in embedded systems

Microcontroller supplier STMicroelectronics has also introduced a toolbox that allows developers to generate optimized code to run neural networks on its microcontrollers (MCUs). Developers can take neural networks pre-trained in popular frameworks such as Caffe, Keras, TensorFlow, Lasagne, and ConvNetJS and convert them into C code that can run on MCUs.
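One ingredient of turning a trained model into MCU code is emitting its parameters as C data that gets compiled into the firmware. The sketch below is a hypothetical illustration of that idea, not the format STM32Cube.AI actually generates; the function and array names are invented for the example.

```python
from typing import List


def to_c_array(name: str, values: List[int]) -> str:
    """Emit int8 weights as a const C array (hypothetical format, for illustration)."""
    if any(v < -128 or v > 127 for v in values):
        raise ValueError("values must fit in int8_t")
    body = ", ".join(str(v) for v in values)
    # 'const' lets typical MCU toolchains keep the table in flash rather than RAM.
    return (f"#include <stdint.h>\n"
            f"const int8_t {name}[{len(values)}] = {{ {body} }};\n")


print(to_c_array("dense1_weights", [12, -7, 0, 127]))
```

Running it prints a two-line C snippet declaring `const int8_t dense1_weights[4] = { 12, -7, 0, 127 };`, which a C compiler can link directly into the application.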

That is how AI, which commonly relies on nearly unlimited cloud resources, can run efficiently on embedded platforms with limited compute and memory. The toolkit lets developers classify data from IoT sensor nodes, such as motion sensors and microphones, with a neural network instead of conventional signal-processing techniques.
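As a minimal sketch of what "classifying sensor data with a neural network" means at run time, the code below passes two features of an accelerometer window through one dense layer and a softmax, then picks the most probable class. Everything here is hypothetical: the features, the labels, and especially the hand-picked weights, which stand in for parameters that would normally come from training.

```python
import math
from typing import List, Sequence


def dense(x: Sequence[float], w: List[List[float]], b: List[float]) -> List[float]:
    # One fully connected layer: out[j] = sum_i w[j][i] * x[i] + b[j]
    return [sum(wi * xi for wi, xi in zip(row, x)) + bj for row, bj in zip(w, b)]


def softmax(z: List[float]) -> List[float]:
    # Subtract the largest logit for numerical stability before exponentiating.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def classify(window: Sequence[float], w: List[List[float]], b: List[float],
             labels: List[str]) -> str:
    # Two simple features of an accelerometer-magnitude window: mean and peak-to-peak.
    features = [sum(window) / len(window), max(window) - min(window)]
    probs = softmax(dense(features, w, b))
    return labels[probs.index(max(probs))]


# Hypothetical, hand-picked parameters standing in for trained weights.
LABELS = ["stationary", "moving"]
W = [[0.0, -4.0], [0.0, 4.0]]
B = [1.0, -1.0]

print(classify([1.0, 1.01, 0.99, 1.0], W, B, LABELS))  # stationary
print(classify([0.5, 1.6, 0.7, 1.4], W, B, LABELS))    # moving
```

The decision boundary lives in learned parameters rather than in a hand-tuned threshold, which is the shift the toolkit is meant to enable.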

STMicroelectronics has added AI features to its STM32CubeMX MCU configuration and code-generation ecosystem. They come in the form of STM32Cube.AI, an expansion pack for the STM32CubeMX code generator. The code it produces runs on the company's STM32 MCUs, and STM32Cube.AI is also available as a function pack that includes example code for human activity recognition and audio scene classification.

Fig. 1: The STM32Cube.AI expansion pack, which can be downloaded from within the STM32CubeMX code generator, supports the Caffe, Keras (with TensorFlow backend), Lasagne, and ConvNetJS frameworks as well as IDEs including Keil, IAR, and System Workbench. (Image: STMicroelectronics)

The STM32CubeMX code generator maps a neural network onto an STM32 MCU and then optimizes the resulting library. The STM32Cube.AI function pack uses ST's SensorTile reference board to capture and label sensor data before training; the same board then runs inference with the optimized neural network. The code examples also work with ST's BLE Sensor mobile app.
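Before training, a labeled sensor stream is usually cut into fixed-size windows, each with a single label. The article does not describe how the function pack does this, so here is a generic sketch with hypothetical names, where each window takes the majority label of its samples and consecutive windows may overlap.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def make_windows(samples: Sequence[float], labels: Sequence[str],
                 size: int, step: int) -> List[Tuple[List[float], str]]:
    """Cut a labeled sensor stream into fixed-size training windows.

    Each window is assigned the majority label of the samples it contains.
    A step smaller than size produces overlapping windows.
    """
    if len(samples) != len(labels):
        raise ValueError("every sample needs a label")
    out = []
    for start in range(0, len(samples) - size + 1, step):
        window = list(samples[start:start + size])
        label = Counter(labels[start:start + size]).most_common(1)[0][0]
        out.append((window, label))
    return out


samples = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
labels = ["idle", "idle", "idle", "walk", "walk", "walk"]
for window, label in make_windows(samples, labels, size=3, step=2):
    print(label, window)
```

With a window of 3 and a step of 2, the stream yields two windows: the first is all "idle", and the second straddles the transition and is labeled "walk" by majority.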

Machine-learning toolkit

Another notable AI development kit also comes from an MCU supplier. NXP Semiconductors' eIQ is a machine-learning (ML) toolkit that supports TensorFlow Lite, Caffe2, and other neural-network frameworks as well as non-neural ML algorithms. It facilitates model conversion across a wide range of neural-network frameworks and inference engines for voice, vision, and anomaly-detection applications.

The eIQ software environment provides tools to structure and optimize cloud-trained ML models so that they can run efficiently on resource-constrained edge devices in a broad range of industrial, IoT, and automotive applications. It also features data-acquisition and -curation tools as well as support for neural-network compilers such as Glow and XLA.
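A first check when moving a cloud-trained model to an edge device is simply whether its weights fit in memory. The arithmetic below is a generic sketch with a hypothetical model size and flash budget: storing each parameter as a 4-byte float versus a 1-byte integer changes the weight footprint by 4×.

```python
def weight_bytes(num_params: int, bytes_per_param: int) -> int:
    # Storage for the weights alone; activations, runtime code, and
    # quantization metadata (such as scale factors) are ignored here.
    return num_params * bytes_per_param


def fits_in_flash(num_params: int, bytes_per_param: int, flash_bytes: int) -> bool:
    return weight_bytes(num_params, bytes_per_param) <= flash_bytes


PARAMS = 300_000         # hypothetical model size
FLASH = 1 * 1024 * 1024  # hypothetical 1-MiB flash budget

print(weight_bytes(PARAMS, 4), fits_in_flash(PARAMS, 4, FLASH))  # 1200000 False
print(weight_bytes(PARAMS, 1), fits_in_flash(PARAMS, 1, FLASH))  # 300000 True
```

At float32, the 300,000 weights need 1,200,000 bytes, more than the 1,048,576 bytes available; at int8, they need 300,000 bytes and fit with room to spare.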

The eIQ toolkit, which supports NXP's MCU and applications-processor lineups, also helps designers select and program the embedded compute engine for each layer of a deep-learning algorithm. Automating this selection is intended to boost performance and shorten time to market.
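Per-layer engine selection can be pictured as a lookup over estimated costs. The article does not describe eIQ's actual selection logic; the sketch below is a naive greedy rule with hypothetical engine names and made-up latency estimates, where a missing entry means the engine cannot run that layer type.

```python
from typing import Dict, List

# Hypothetical per-layer latency estimates in microseconds. A missing entry
# means the engine cannot run that layer type.
COST_US: Dict[str, Dict[str, float]] = {
    "conv2d":  {"cpu": 900.0, "gpu": 250.0, "npu": 80.0},
    "lstm":    {"cpu": 400.0, "gpu": 300.0},
    "softmax": {"cpu": 5.0, "gpu": 20.0, "npu": 15.0},
}


def assign_engines(layers: List[str], cost: Dict[str, Dict[str, float]]) -> List[str]:
    # Greedy rule: each layer goes to its cheapest supported engine.
    # Data-transfer cost between engines is ignored in this sketch.
    return [min(cost[layer], key=cost[layer].__getitem__) for layer in layers]


def total_latency(layers: List[str], engines: List[str],
                  cost: Dict[str, Dict[str, float]]) -> float:
    return sum(cost[layer][eng] for layer, eng in zip(layers, engines))


layers = ["conv2d", "lstm", "softmax"]
engines = assign_engines(layers, COST_US)
print(engines)                                  # ['npu', 'gpu', 'cpu']
print(total_latency(layers, engines, COST_US))  # 385.0
```

Because this rule ignores the cost of moving tensors between engines, a real tool could reasonably keep neighboring layers on the same engine even when another engine is nominally faster for one of them.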

NXP has expanded the eIQ machine-learning development environment to automotive AI designs with the launch of eIQ Auto. The automotive-grade toolkit enables developers to implement deep-learning-based algorithms in vision, driver replacement, sensor fusion, driver monitoring, and other evolving automotive applications. The inference engine in eIQ Auto is compliant with Automotive SPICE.

Arm’s AI toolchain

Both AI development platforms, NXP's eIQ and ST's STM32Cube.AI, are tied to Arm's ecosystem. Arm's Cortex-M55 processor, for instance, is supported in the STM32Cube.AI toolset. Likewise, NXP is incorporating Arm's Ethos-U55 machine-learning accelerator into its MCUs and applications processors.

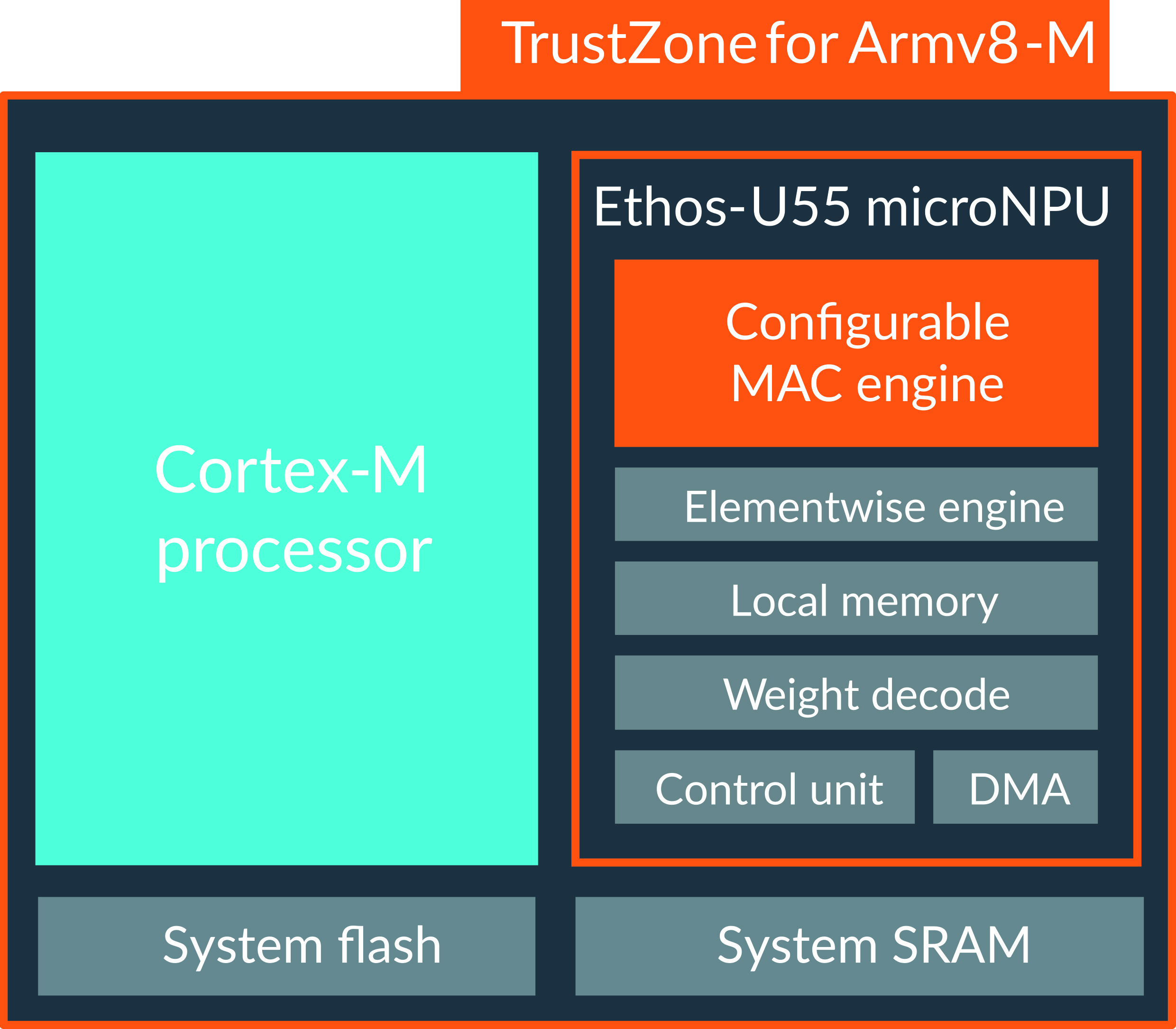

Arm's Cortex-M software toolchain supports both Cortex-M55 and Ethos-U55. Compared with previous Cortex-M processors, Cortex-M55 delivers up to a 15× uplift in ML performance and a 5× uplift in DSP performance. Cortex-M55 can also be paired with the Ethos-U55, Arm's microNPU designed to accelerate ML inference in area-constrained embedded and IoT devices.

Fig. 2: The Ethos-U55 machine-learning accelerator works alongside the Cortex-M core to shrink the AI chip footprint and thus efficiently serve constrained embedded designs. (Image: Arm)
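When a microNPU works alongside a Cortex-M core, a model's operators are typically split: runs of operators the NPU supports are offloaded, and the rest fall back to the CPU. The sketch below illustrates that partitioning idea only; the operator names and the `NPU_OPS` set are hypothetical and do not reflect the Ethos-U55's actual operator coverage.

```python
from itertools import groupby
from typing import List, Set, Tuple

# Hypothetical set of operators the NPU can execute; the real list depends on
# the accelerator and its compiler, not on this sketch.
NPU_OPS: Set[str] = {"conv2d", "depthwise_conv2d", "fully_connected", "add", "relu"}


def partition(ops: List[str], npu_ops: Set[str]) -> List[Tuple[str, List[str]]]:
    # Group consecutive operators by target so each run becomes one segment;
    # operators the NPU cannot run fall back to the Cortex-M CPU.
    return [("npu" if on_npu else "cpu", list(run))
            for on_npu, run in groupby(ops, key=lambda op: op in npu_ops)]


model = ["conv2d", "relu", "custom_op", "fully_connected", "postprocess"]
for target, segment in partition(model, NPU_OPS):
    print(target, segment)
```

Grouping consecutive operators matters because every switch between NPU and CPU segments costs a hand-off, so fewer, longer NPU segments generally make better use of the accelerator.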

Arm's Cortex-M software toolchain aims to provide a unified development flow for AI workloads and to optimize the leading machine-learning frameworks, starting with TensorFlow Lite. Google and Arm are working together to optimize TensorFlow for Arm processor architectures, enabling machine learning on power-constrained and cost-sensitive embedded devices.
